Welcome to the Diplomats in Germany project. These instructions will guide you through the setup process.

Most steps are automated to make the setup as easy as possible.

The setup consists of the following steps:

- create Google Cloud project

- authorize APIs and billing

- set preferred cloud location

- create service account for Terraform

- create infrastructure with Terraform

- set up Airflow

- run data pipeline

- view the Google Data Studio report

- remove project infrastructure

Running the project costs approx. $1 in credits per hour.

Note: If you have closed the instructions pane and want to reopen it, run the following command in the Cloud Shell terminal:

cloudshell launch-tutorial project-walkthrough.md

Click on Start to open the instructions for creating a new project.

There are two options to create a Google Cloud project:

- Option 1: Command Line

- Option 2: Graphical User Interface

Proceed with one of the two options.

Option 1: Command Line

Tip: If you have opened the instructions in Google Cloud Shell, you can click the grey Cloud Shell icon at the top right corner of a command to copy it to the Cloud Shell terminal, then press Enter in the terminal to execute it.

- Generate a name for your project with a random id:

PROJECT_ID="diplomats-in-germany-$(shuf -i 100000-999999 -n 1)"

- Create the project with the generated ID (Note: You might need to authorize the terminal session):

gcloud projects create $PROJECT_ID --name="Diplomats in Germany"

- Activate the created project in Cloud Shell (this lets us access the project resources from the terminal and saves the project ID in the variable $GOOGLE_CLOUD_PROJECT):

gcloud config set project $PROJECT_ID
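Google Cloud project IDs must be 6 to 30 characters long, may contain only lowercase letters, digits and hyphens, must start with a letter and cannot end with a hyphen. The generated ID (27 characters) satisfies these rules. If you change the prefix, a quick check like the following sketch can catch an invalid ID before `gcloud projects create` rejects it. The function `is_valid_project_id` is a hypothetical helper, not part of the project.

```shell
# Hypothetical helper: succeeds if $1 follows the Google Cloud project ID
# rules (6-30 characters, lowercase letters, digits and hyphens, starts
# with a letter, does not end with a hyphen).
is_valid_project_id() {
  printf '%s\n' "$1" | grep -Eq '^[a-z][a-z0-9-]{4,28}[a-z0-9]$'
}
```

For example, `is_valid_project_id "$PROJECT_ID" && echo "valid project ID: $PROJECT_ID"` checks the ID generated above without generating a new one.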

Note: If the Cloud Shell session has timed out and you want to reopen your project, run the following commands:

PROJECT_ID=$(gcloud projects list --filter='diplomats-in-germany' --limit=1 --format='value(projectId)')

gcloud config set project $PROJECT_ID

Click Next to start authorizing the necessary APIs for this project.

Option 2: Graphical User Interface

Click create a new project to create a new Google Cloud project. Afterwards, select the created project in the dropdown field below.

Project ID:

Project Name:

Click Next to start authorizing the necessary APIs for this project.

Execute the following command to enable these three APIs for the project:

- BigQuery

- Cloud Storage

- Compute Engine

gcloud services enable bigquery.googleapis.com storage-component.googleapis.com compute.googleapis.com

Note: If an error message says that a billing account is missing for this new project, follow these additional steps:

- Go to the Google Cloud Platform billing config.

- Click on the three dots next to the new project and select "Change billing".

- Choose the billing account and confirm by clicking "Set account".

- Execute the shell command above again to enable the APIs.
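To confirm that all three APIs are enabled, you can compare the list of enabled services with the required ones. The sketch below reads enabled service names from standard input, one per line, and prints every required service that is missing. The helper `missing_services` is hypothetical, and the `--format='value(config.name)'` projection in the usage line is an assumption about how to print bare service names.

```shell
# Services enabled by the "gcloud services enable" command above.
REQUIRED_SERVICES="bigquery.googleapis.com storage-component.googleapis.com compute.googleapis.com"

# Hypothetical helper: reads enabled service names from stdin (one per
# line) and prints each required service that is not among them.
missing_services() {
  enabled=$(cat)
  for svc in $REQUIRED_SERVICES; do
    printf '%s\n' "$enabled" | grep -qxF -e "$svc" || printf '%s\n' "$svc"
  done
}
```

Usage: `gcloud services list --enabled --format='value(config.name)' | missing_services` prints nothing when all three APIs are enabled.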

Click Next to configure the cloud location.

Execute the following command to set a region as cloud location for this project:

gcloud config set compute/region europe-west1

Note: The region will be set to europe-west1. If you would like to use another location, choose your preferred region and change it in the shell command above.

Execute the following command to set a zone within the selected region:

gcloud config set compute/zone "$(gcloud config get compute/region)-b"

Note: The zone will be set to europe-west1-b. If you would like to use another zone, change the letter b to your preferred zone letter. The available zones of each region are listed in the Google Cloud documentation on regions and zones.

Execute the following command to store the selected region and zone in variables for further usage in this walkthrough:

GOOGLE_CLOUD_REGION=$(gcloud config get compute/region)

GOOGLE_CLOUD_ZONE=$(gcloud config get compute/zone)
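Zone names are built from the region name, a hyphen and a zone letter, which is what the `-b` suffix in the zone command above relies on. A minimal sketch of that convention follows; `zone_for_region` is a hypothetical helper that defaults to the letter b and rejects anything that is not a single lowercase letter.

```shell
# Hypothetical helper: builds a zone name from a region and a zone letter,
# e.g. region "europe-west1" and letter "b" give "europe-west1-b".
zone_for_region() {
  region=$1
  letter=${2:-b}  # default to "b", as in the zone command above
  case $letter in
    [a-z]) printf '%s-%s\n' "$region" "$letter" ;;
    *) echo "zone letter must be a single lowercase letter: $letter" >&2; return 1 ;;
  esac
}
```

For example, `gcloud config set compute/zone "$(zone_for_region "$(gcloud config get compute/region)" c)"` would select zone c instead of b, provided that zone exists in your region.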

Click Next to configure a service account for Terraform.

Create a service account for Terraform and store its email address in a variable for later use:

gcloud iam service-accounts create svc-terraform --display-name="Terraform Service Account" --project=$GOOGLE_CLOUD_PROJECT

GCP_SA_MAIL="svc-terraform@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com"
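The email address of a user-managed service account has the form ACCOUNT_ID@PROJECT_ID.iam.gserviceaccount.com, and the account ID must be 6 to 30 characters of lowercase letters, digits and hyphens, starting with a letter and ending with a letter or digit. The following sketch checks the account ID and builds the address, equivalent to the GCP_SA_MAIL assignment above. The helper `sa_email` is hypothetical.

```shell
# Hypothetical helper: prints the email address of a user-managed service
# account after checking the account ID format (6-30 characters,
# lowercase letters, digits and hyphens, starting with a letter and
# ending with a letter or digit).
sa_email() {
  account_id=$1
  project_id=$2
  if ! printf '%s\n' "$account_id" | grep -Eq '^[a-z][a-z0-9-]{4,28}[a-z0-9]$'; then
    echo "invalid service account ID: $account_id" >&2
    return 1
  fi
  printf '%s@%s.iam.gserviceaccount.com\n' "$account_id" "$project_id"
}
```

Usage: `GCP_SA_MAIL=$(sa_email svc-terraform "$GOOGLE_CLOUD_PROJECT")`.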

The service account for Terraform needs three roles:

- roles/bigquery.admin

- roles/storage.admin

- roles/compute.admin

Add these roles to the service account:

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT --member="serviceAccount:$GCP_SA_MAIL" --role='roles/bigquery.admin'

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT --member="serviceAccount:$GCP_SA_MAIL" --role='roles/storage.admin'

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT --member="serviceAccount:$GCP_SA_MAIL" --role='roles/compute.admin'
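The three `add-iam-policy-binding` commands differ only in the role, so they can also be written as a loop. The sketch below is one way to do that; the helper `bind_terraform_roles`, the `TF_ROLES` list and the `DRY_RUN` switch are hypothetical additions for illustration. With `DRY_RUN=1` the commands are only printed, which lets you review them before granting anything.

```shell
# Roles the Terraform service account needs (same list as above).
TF_ROLES="roles/bigquery.admin roles/storage.admin roles/compute.admin"

# Hypothetical helper: grants every role in TF_ROLES to $GCP_SA_MAIL on
# $GOOGLE_CLOUD_PROJECT. With DRY_RUN set to a non-empty value, the gcloud
# commands are printed instead of executed. Stops at the first failure.
bind_terraform_roles() {
  for role in $TF_ROLES; do
    ${DRY_RUN:+echo} gcloud projects add-iam-policy-binding "$GOOGLE_CLOUD_PROJECT" \
      --member="serviceAccount:$GCP_SA_MAIL" --role="$role" || return 1
  done
}
```

Usage: `DRY_RUN=1 bind_terraform_roles` to preview, then `bind_terraform_roles` to apply the bindings.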

Create a key for the service account and save it in credentials/terraform-gcp-key.json in Google Cloud Shell:

gcloud iam service-accounts keys create credentials/terraform-gcp-key.json --iam-account=$GCP_SA_MAIL

Note: If you would like to save the key on your local computer as well, execute the following command:

cloudshell download credentials/terraform-gcp-key.json
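To double-check that the key file belongs to the Terraform service account, you can compare its client_email field with $GCP_SA_MAIL. The sketch below extracts that field with sed instead of a JSON parser, assuming the field sits on a single line as in the pretty-printed key files that gcloud writes. The helper `key_client_email` is hypothetical.

```shell
# Hypothetical helper: prints the "client_email" value of a service
# account key file. Assumes the field is on one line (no JSON parser).
key_client_email() {
  sed -n 's/.*"client_email": *"\([^"]*\)".*/\1/p' "$1"
}
```

Usage: `[ "$(key_client_email credentials/terraform-gcp-key.json)" = "$GCP_SA_MAIL" ] && echo "key matches the service account"`.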

Click Next to start creating the cloud infrastructure.

It is good practice to store the Terraform state file in remote storage: this versions the state of the infrastructure, prevents data loss and lets other team members change the infrastructure as well.

Set a name for the bucket in which the Terraform remote state file will be stored:

TF_BUCKET_NAME="$GOOGLE_CLOUD_PROJECT-tf-state"

Execute the following command to create a bucket for the Terraform remote state file:

gsutil mb -p $GOOGLE_CLOUD_PROJECT -c STANDARD -l $GOOGLE_CLOUD_REGION gs://$TF_BUCKET_NAME

Enable object versioning on the bucket to track changes in the state file and therefore document infrastructure changes:

gsutil versioning set on gs://$GOOGLE_CLOUD_PROJECT-tf-state
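Cloud Storage bucket names are global and must follow naming rules: names without dots are 3 to 63 characters long, contain only lowercase letters, digits, hyphens and underscores, and start and end with a letter or digit. Since project IDs are at most 30 characters, $GOOGLE_CLOUD_PROJECT-tf-state always fits the length limit. The sketch below (hypothetical helper `is_valid_bucket_name`) checks only these basic rules; dotted names and the reserved "goog"/"google" restrictions are not covered.

```shell
# Hypothetical helper: checks a bucket name without dots against the basic
# Cloud Storage naming rules (3-63 characters, lowercase letters, digits,
# "-" and "_", starting and ending with a letter or digit).
is_valid_bucket_name() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9][a-z0-9_-]{1,61}[a-z0-9]$'
}
```

Usage: `is_valid_bucket_name "$GOOGLE_CLOUD_PROJECT-tf-state" || echo "invalid bucket name"`.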

Change to the setup directory:

cd setup

Create an SSH key pair for the Compute Engine VM:

ssh-keygen -f ~/.ssh/id_rsa -N ""

Initialize Terraform with the bucket as remote backend for the state file (.tfstate):

terraform init -backend-config="bucket=$TF_BUCKET_NAME"

Create an execution plan to build the defined infrastructure:

terraform plan -var="project=$GOOGLE_CLOUD_PROJECT" -var="region=$GOOGLE_CLOUD_REGION" -var="zone=$GOOGLE_CLOUD_ZONE"

Execute the plan and build the infrastructure (this might take a couple of minutes):

terraform apply -auto-approve -var="project=$GOOGLE_CLOUD_PROJECT" -var="region=$GOOGLE_CLOUD_REGION" -var="zone=$GOOGLE_CLOUD_ZONE"

Now you have created the necessary infrastructure.
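The plan, apply and later destroy commands all repeat the same three -var flags. A small wrapper function can pass them once; this is a sketch, not part of the project's scripts, and the names `tf_run` and `TF_CMD` are hypothetical. It also refuses to run when one of the variables is empty, which can happen after a Cloud Shell session timeout clears the shell variables.

```shell
# Hypothetical wrapper: runs a Terraform subcommand with the three -var
# flags used in this walkthrough. Extra arguments are passed through.
# TF_CMD (default: terraform) can be set to "echo" for a dry run.
tf_run() {
  for value in "$GOOGLE_CLOUD_PROJECT" "$GOOGLE_CLOUD_REGION" "$GOOGLE_CLOUD_ZONE"; do
    if [ -z "$value" ]; then
      echo "GOOGLE_CLOUD_PROJECT, GOOGLE_CLOUD_REGION and GOOGLE_CLOUD_ZONE must be set" >&2
      return 1
    fi
  done
  subcommand=$1
  shift
  ${TF_CMD:-terraform} "$subcommand" "$@" \
    -var="project=$GOOGLE_CLOUD_PROJECT" \
    -var="region=$GOOGLE_CLOUD_REGION" \
    -var="zone=$GOOGLE_CLOUD_ZONE"
}
```

With this helper, `tf_run plan`, `tf_run apply -auto-approve` and `tf_run destroy -auto-approve` correspond to the Terraform commands in this walkthrough.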

Click Next to set up Airflow.

Get the external IP address of the Compute Engine VM:

IP_ADDRESS="$(gcloud compute instances describe airflow-host --format='get(networkInterfaces[0].accessConfigs[0].natIP)')"

Insert the current project settings into the environment file .env:

cp ../airflow/template.env ../airflow/.env

sed -i "s/^\(GCP_PROJECT_ID=\).*$/\1$GOOGLE_CLOUD_PROJECT/gm" ../airflow/.env

sed -i "s/^\(GCP_GCS_BUCKET=\).*$/\1$GOOGLE_CLOUD_PROJECT-$GOOGLE_CLOUD_REGION-data-lake/gm" ../airflow/.env

sed -i "s/^\(GCP_LOCATION=\).*$/\1$GOOGLE_CLOUD_REGION/gm" ../airflow/.env
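The three sed commands above follow the same pattern: replace everything after KEY= on the matching line. A reusable sketch of that pattern is shown below. The helper `set_env_var` is hypothetical; it uses | as the sed delimiter so that values containing / do not break the expression, assumes GNU sed (as in Cloud Shell) for `sed -i`, and, unlike the commands above, appends the key if it is missing from the file.

```shell
# Hypothetical helper: sets KEY=VALUE in an env file. Existing KEY= lines
# are rewritten with sed (GNU sed -i); missing keys are appended.
# VALUE must not contain "|", "&" or "\", which sed would interpret.
set_env_var() {
  key=$1
  value=$2
  file=$3
  if grep -q "^$key=" "$file"; then
    sed -i "s|^$key=.*|$key=$value|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

Usage, equivalent to the first sed command above: `set_env_var GCP_PROJECT_ID "$GOOGLE_CLOUD_PROJECT" ../airflow/.env`.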

Create the target folders and transfer the data to the VM:

ssh -o StrictHostKeyChecking=no local@$IP_ADDRESS "mkdir -p ~/app/airflow ~/app/dbt ~/app/credentials"

scp -r ../airflow ../dbt ../credentials local@$IP_ADDRESS:~/app

ssh local@$IP_ADDRESS "chmod o+r ~/app/credentials/* && chmod o+w ~/app/dbt"

Connect to the VM:

ssh local@$IP_ADDRESS

Install Docker and Docker Compose:

sudo apt update

sudo apt install -y apt-transport-https ca-certificates curl software-properties-common gnupg lsb-release

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt update

sudo apt-get -y install docker-ce docker-ce-cli containerd.io

sudo curl -L "https://github.com/docker/compose/releases/download/v2.4.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

sudo systemctl start docker

sudo usermod -aG docker $USER

newgrp docker
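Before building the containers, you can check that the Docker CLI and the Docker Compose binary are on the PATH. The helper `check_commands` below is a hypothetical sketch using the POSIX `command -v` builtin; it prints where each command was found and returns a non-zero status if any is missing.

```shell
# Hypothetical helper: reports where each command is found and returns a
# non-zero status if at least one of them is missing.
check_commands() {
  rc=0
  for cmd in "$@"; do
    if cmd_path=$(command -v "$cmd"); then
      printf '%s: %s\n' "$cmd" "$cmd_path"
    else
      printf '%s: not found\n' "$cmd" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_commands docker docker-compose && docker-compose version`.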

Build and start the Airflow containers:

cd ~/app/airflow

/usr/local/bin/docker-compose build

/usr/local/bin/docker-compose up airflow-init

/usr/local/bin/docker-compose up -d

Click Next to run the data pipeline in Airflow.

Note: If you are not connected to local@airflow-host, execute the following commands:

IP_ADDRESS="$(gcloud compute instances describe airflow-host --format='get(networkInterfaces[0].accessConfigs[0].natIP)')"

ssh local@$IP_ADDRESS

Connect to the Airflow scheduler container:

docker exec -it diplomats-airflow-scheduler-1 bash

Start the DAG by unpausing it:

airflow dags unpause ingest_diplomats_dag

Use this command to see the DAG's current status (you might need to wait until the DAG run is completed and run the command again to see the new status):

airflow tasks states-for-dag-run ingest_diplomats_dag $(date -d "yesterday" '+%Y-%m-%d')

If every task in the DAG shows the state success, the run is completed. The DAG is scheduled to run daily and checks whether a new version of the PDF list of diplomats has been published online. If a new version is found, the data is extracted, versioned and added to the BigQuery tables.
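Instead of re-running the status command by hand, you can poll until the run has finished. The sketch below uses a generic, hypothetical `retry` helper. The check `dag_run_succeeded` is an assumption: it calls `airflow dags state` for the same date and assumes the last line of its output is the run state (for example success). Run it inside the scheduler container.

```shell
# Hypothetical helper: re-runs a command until it succeeds, at most
# MAX_TRIES times, sleeping DELAY seconds between attempts.
retry() {
  max_tries=$1
  delay=$2
  shift 2
  attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max_tries" ]; then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Assumed check: succeeds once the DAG run for yesterday's date is in the
# "success" state (assumes the state is the last line printed).
dag_run_succeeded() {
  state=$(airflow dags state ingest_diplomats_dag "$(date -d "yesterday" '+%Y-%m-%d')" | tail -n 1)
  [ "$state" = "success" ]
}
```

Usage: `retry 20 30 dag_run_succeeded` checks every 30 seconds for up to about ten minutes.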

Close the connection to the Airflow scheduler container and return to the Airflow host:

exit

Close the connection to the Airflow host and return to Cloud Shell:

exit

Click Next to see the results.

If you open BigQuery, you will see five tables in the datamart dataset. These tables contain the data for the Data Studio report. You can also list them from the terminal:

bq ls --max_results 10 "$GOOGLE_CLOUD_PROJECT:datamart"

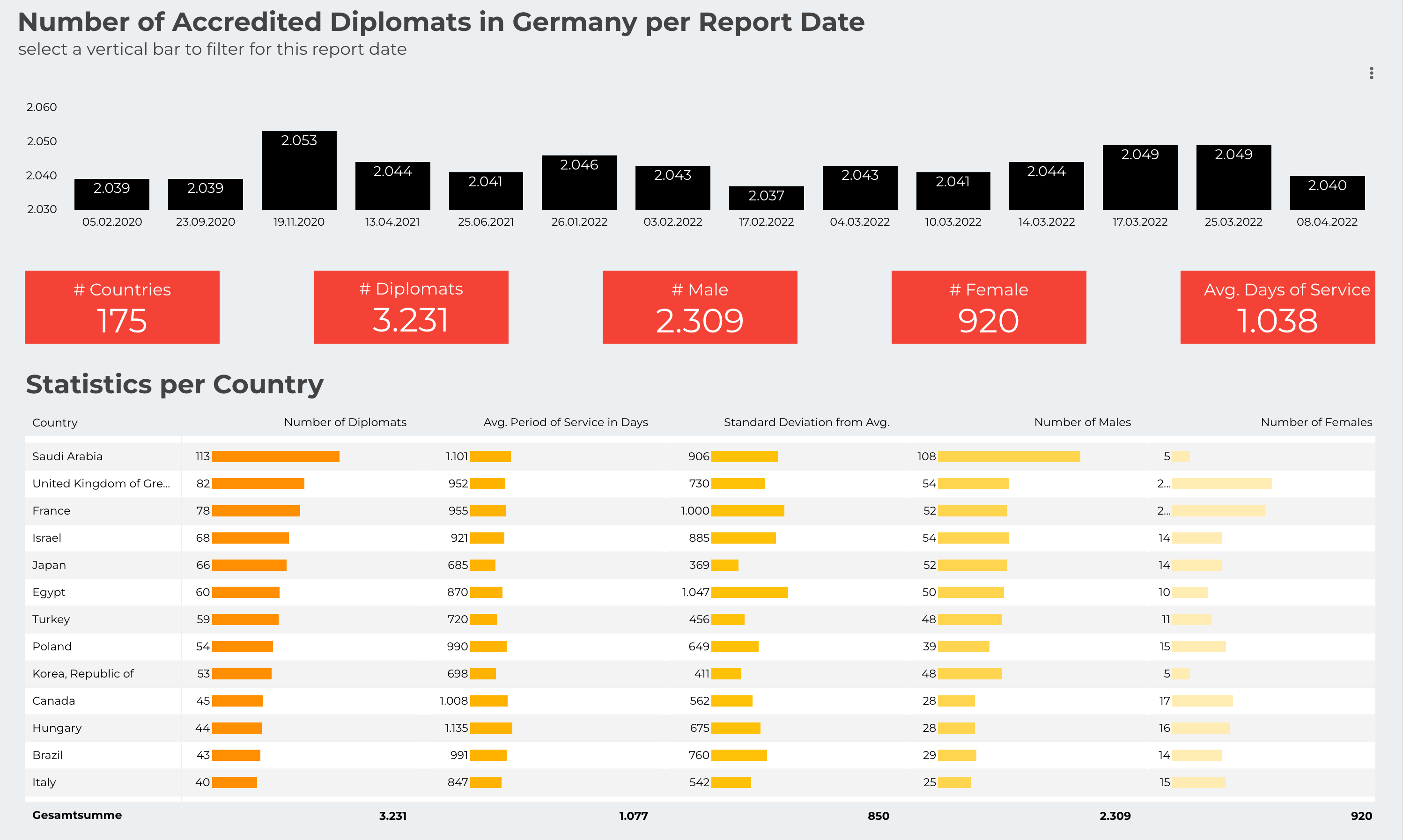

Open the report using the following link to see how many diplomats from other countries are currently accredited in Germany, how many of them are male and female, and how long they stay on post on average: https://datastudio.google.com/reporting/c67883ee-7b3a-481f-a28f-e001b0c3c743

You have gone through the setup, run the data pipeline and seen the results.

Click Next to clean up the project and remove the infrastructure.

- Remove infrastructure using Terraform:

cd ~/cloudshell_open/diplomats-in-germany/setup

terraform destroy -auto-approve -var="project=$GOOGLE_CLOUD_PROJECT" -var="region=$GOOGLE_CLOUD_REGION" -var="zone=$GOOGLE_CLOUD_ZONE"

- Delete the Google Cloud project and type Y when you are prompted to confirm the deletion (Note: The following command deletes the currently active project in Cloud Shell. Use echo $GOOGLE_CLOUD_PROJECT to check which project is active):

gcloud projects delete $GOOGLE_CLOUD_PROJECT

If you feel uncertain, you can delete the project manually in the Google Cloud Console: visit the Resource Manager.

- Remove cloned project repository from your persistent Google Cloud Shell storage:

cd ~/cloudshell_open

rm -rf diplomats-in-germany

Click Next to complete the walkthrough.

You have gone through the whole project, from the setup, through running the data pipeline, to using the resulting dashboard. I hope you enjoyed the project.

Do not forget to remove the infrastructure if you have not done so already.

This project is my capstone project for DataTalksClub's highly recommended Data Engineering Zoomcamp. Pay them a visit, they are amazing!