
Ultralytics YOLOv3 🚀 is a significant iteration in the YOLO (You Only Look Once) family of real-time object detection models. Originally developed by Joseph Redmon, YOLOv3 improved upon its predecessors by enhancing accuracy, particularly for smaller objects, through techniques like multi-scale predictions and a more complex backbone network (Darknet-53). This repository represents Ultralytics' implementation, building upon the foundational work and incorporating best practices learned through extensive research in computer vision and deep learning.

We hope the resources here help you leverage YOLOv3 effectively. Explore the Ultralytics YOLOv3 Docs for detailed guides, raise issues on GitHub for support, and join our vibrant Discord community for questions and discussions!

To request an Enterprise License for commercial use, please complete the form at Ultralytics Licensing.

We are thrilled to announce the release of Ultralytics YOLO11 🚀, the next generation in state-of-the-art (SOTA) vision models! Now available at the main Ultralytics repository, YOLO11 continues our commitment to speed, accuracy, and user-friendliness. Whether your focus is object detection, image segmentation, or image classification, YOLO11 offers unparalleled performance and versatility for a wide range of applications.

Start exploring YOLO11 today! Visit the Ultralytics Docs for comprehensive guides and resources.

pip install ultralytics

See the Ultralytics YOLOv3 Docs for full documentation on training, validation, inference, and deployment. Quickstart examples are provided below.

Install

Clone the repository and install dependencies using requirements.txt in a Python>=3.7.0 environment. Ensure you have PyTorch>=1.7.
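If you want to confirm these minimums before installing, a small check along these lines can help. This is a hypothetical helper, not part of the repository: check_requirements and parse_version are illustrative names, and the version parsing is deliberately simplified (it keeps only the leading digits of each component). PyTorch is imported lazily so the Python check still runs when PyTorch is missing.

```python
import sys


def parse_version(text):
    """Convert a version string such as '1.13.1+cu117' into a 3-tuple of ints."""
    core = str(text).split("+")[0]  # drop local build tags like '+cu117'
    parts = []
    for piece in core.split(".")[:3]:
        digits = ""
        for ch in piece:  # keep leading digits only, e.g. '0a0' -> '0'
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    while len(parts) < 3:  # pad '2.1' to (2, 1, 0) so tuple comparison is fair
        parts.append(0)
    return tuple(parts)


def check_requirements(min_python=(3, 7, 0), min_torch=(1, 7, 0)):
    """Return a list of problems; an empty list means both minimums are met."""
    problems = []
    if tuple(sys.version_info[:3]) < min_python:
        problems.append(f"Python {sys.version.split()[0]} is older than {'.'.join(map(str, min_python))}")
    try:
        import torch  # imported here so a missing PyTorch is reported, not raised
    except ImportError:
        problems.append("PyTorch is not installed")
    else:
        if parse_version(torch.__version__) < min_torch:
            problems.append(f"PyTorch {torch.__version__} is older than {'.'.join(map(str, min_torch))}")
    return problems


if __name__ == "__main__":
    issues = check_requirements()
    print("Environment OK" if not issues else "\n".join(issues))
```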

git clone https://github.com/ultralytics/yolov3 # clone repository

cd yolov3

pip install -r requirements.txt # install dependencies

Inference

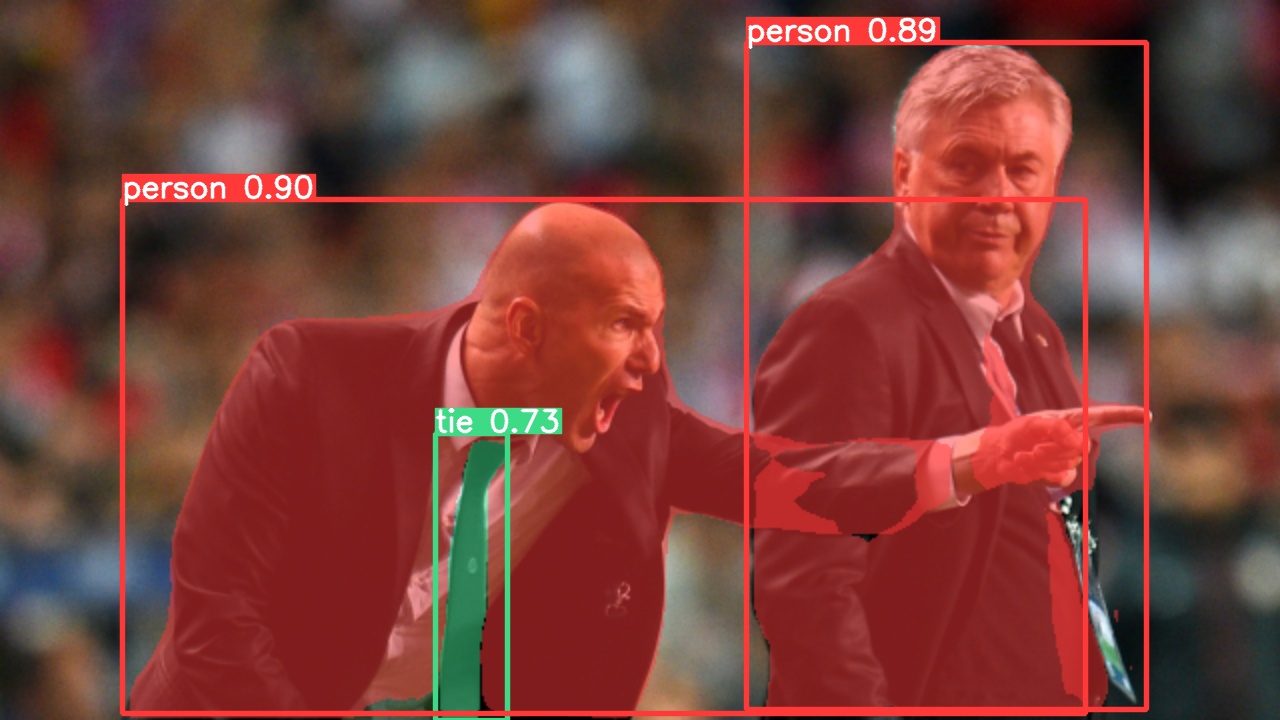

Perform inference using YOLOv3 models loaded via PyTorch Hub. Models are automatically downloaded from the latest YOLOv3 release.

import torch

# Load a YOLOv3 model (e.g., yolov3, yolov3-spp)

model = torch.hub.load("ultralytics/yolov3", "yolov3", pretrained=True) # specify 'yolov3' or other variants

# Define image source (URL, file path, PIL image, OpenCV image, numpy array, list of images)

img_source = "https://ultralytics.com/images/zidane.jpg"

# Perform inference

results = model(img_source)

# Process and display results

results.print() # Print results to console

# results.show() # Display results in a window

# results.save() # Save results to runs/detect/exp

# results.crop() # Save cropped detections

# results.pandas().xyxy[0] # Access results as pandas DataFrame

Inference with detect.py

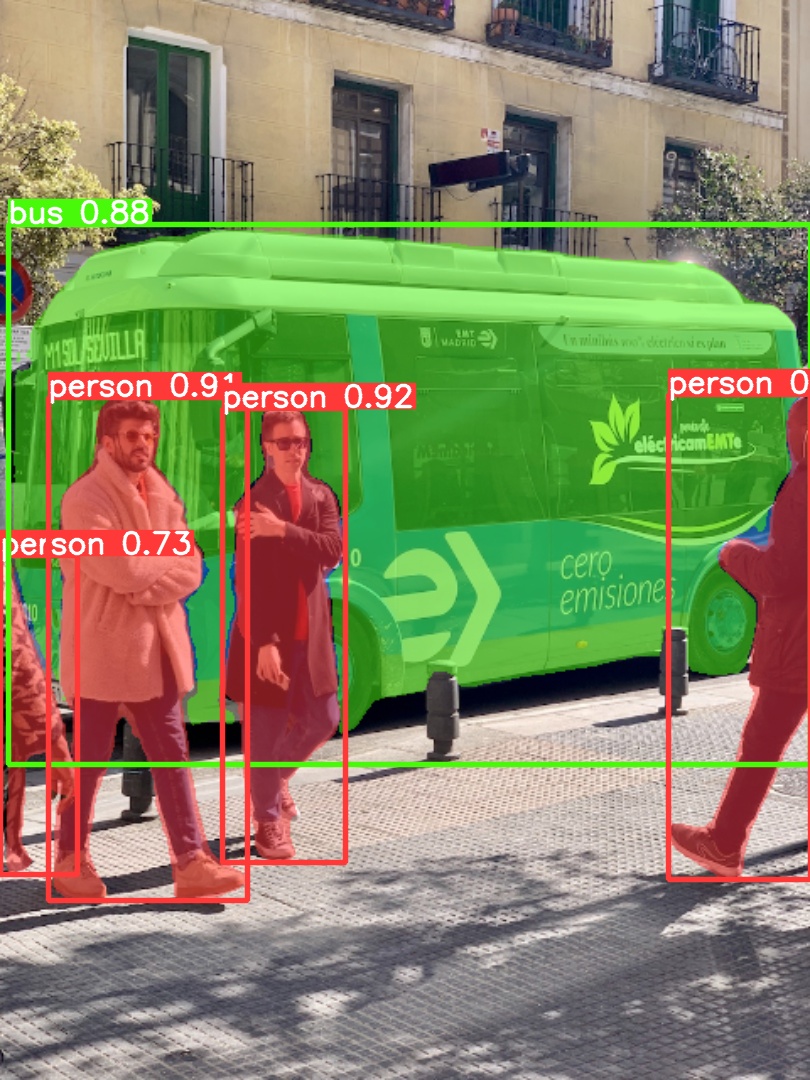

The detect.py script runs inference on various sources. It automatically downloads required models from the latest YOLOv3 release and saves the output to runs/detect.
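The --source argument accepts several kinds of input, as the commands below show. As a rough mental model, a dispatcher like the following could sort a source string into those kinds. It is a simplified, hypothetical sketch: classify_source and the extension sets are illustrative assumptions, and detect.py's actual detection logic and supported formats may differ.

```python
from pathlib import Path

# Illustrative extension sets (assumption); detect.py's own lists are longer.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp", ".webp"}
VIDEO_SUFFIXES = {".mp4", ".avi", ".mov", ".mkv"}
STREAM_PREFIXES = ("rtsp://", "rtmp://", "http://", "https://")


def classify_source(source):
    """Map a --source string to a coarse input kind (hypothetical helper, not detect.py code)."""
    s = str(source).strip()
    lower = s.lower()
    if s.isnumeric():
        return "webcam"  # e.g. '0' selects the first camera
    if lower.startswith("screen"):
        return "screen"
    if lower.startswith(STREAM_PREFIXES):
        return "url"  # live stream, YouTube link, or remote file
    if lower.endswith(".streams"):
        return "stream_list"  # text file with one stream URL per line
    if lower.endswith(".txt"):
        return "path_list"  # text file with one image path per line
    if any(ch in s for ch in "*?["):
        return "glob"
    suffix = Path(s).suffix.lower()
    if suffix in IMAGE_SUFFIXES:
        return "image"
    if suffix in VIDEO_SUFFIXES:
        return "video"
    return "directory"  # fall back to treating it as a folder of images/videos


for src in ["0", "image.jpg", "video.mp4", "screen", "path/", "list.txt", "list.streams", "path/*.jpg"]:
    print(f"{src!r:16} -> {classify_source(src)}")
```

Checking the glob pattern before the file suffix matters here: 'path/*.jpg' ends in .jpg but should expand to many images, not be treated as a single file.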

# Run inference using detect.py with different sources

python detect.py --weights yolov3.pt --source 0 # Webcam

python detect.py --weights yolov3.pt --source image.jpg # Single image

python detect.py --weights yolov3.pt --source video.mp4 # Video file

python detect.py --weights yolov3.pt --source screen # Screen capture

python detect.py --weights yolov3.pt --source path/ # Directory of images/videos

python detect.py --weights yolov3.pt --source list.txt # Text file with image paths

python detect.py --weights yolov3.pt --source list.streams # Text file with stream URLs

python detect.py --weights yolov3.pt --source 'path/*.jpg' # Glob pattern for images

python detect.py --weights yolov3.pt --source 'https://youtu.be/LNwODJXcvt4' # YouTube video URL

python detect.py --weights yolov3.pt --source 'rtsp://example.com/media.mp4' # RTSP, RTMP, HTTP stream URL

Training

The following command trains YOLOv3 on the COCO dataset. Models and datasets are downloaded automatically from the latest YOLOv3 release. Training time depends on model size and hardware; for reference, YOLOv5 models take 1-8 days to train on a V100 GPU. Use the largest --batch-size your hardware allows, or pass --batch-size -1 to enable YOLOv3 AutoBatch. The batch size shown is indicative for a V100-16GB GPU.

# Train YOLOv3 on COCO dataset for 300 epochs

python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov3.yaml --batch-size 128
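With --batch-size -1, AutoBatch chooses a batch size for you. As we understand the YOLOv5-derived codebase, it profiles GPU memory at a few small batch sizes, fits a straight line to memory versus batch size, and solves for the batch size that fills a target fraction of GPU memory. The sketch below illustrates that idea only; fit_line, estimate_batch_size, the 0.8 fraction, and the memory measurements are all hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def estimate_batch_size(batch_sizes, memory_gb, total_gb, fraction=0.8):
    """Largest batch whose predicted memory use stays within fraction * total_gb (assumed fraction)."""
    slope, intercept = fit_line(batch_sizes, memory_gb)
    budget = total_gb * fraction
    return max(1, int((budget - intercept) / slope))


# Hypothetical profiling results on a 16 GB GPU (not real measurements).
batches = [1, 2, 4, 8, 16]
used_gb = [1.35, 1.8, 2.7, 4.5, 8.1]
print(estimate_batch_size(batches, used_gb, total_gb=16.0))  # → 26
```

Here the fitted line is 0.9 GB fixed overhead plus 0.45 GB per image, so a 12.8 GB budget allows int(11.9 / 0.45) = 26 images per batch.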

📚 Tutorials

- Train Custom Data 🚀 RECOMMENDED: Learn how to train YOLO models on your own datasets.

- Tips for Best Training Results ☘️: Improve your model's performance with expert tips.

- Multi-GPU Training: Scale your training across multiple GPUs.

- PyTorch Hub Integration: Load models easily using PyTorch Hub. 🌟 NEW

- Model Export: Export models to various formats like TFLite, ONNX, CoreML, TensorRT. 🚀

- NVIDIA Jetson Deployment: Deploy models on NVIDIA Jetson devices. 🌟 NEW

- Test-Time Augmentation (TTA): Enhance prediction accuracy using TTA.

- Model Ensembling: Combine multiple models for better robustness.

- Model Pruning/Sparsity: Optimize models for size and speed.

- Hyperparameter Evolution: Automatically tune hyperparameters for optimal performance.

- Transfer Learning: Fine-tune pretrained models on your custom data.

- Architecture Summary: Understand the underlying model architecture. 🌟 NEW

- Ultralytics HUB Training 🚀 RECOMMENDED: Train and deploy YOLO models easily using Ultralytics HUB.

- ClearML Logging: Integrate experiment tracking with ClearML.

- Neural Magic DeepSparse Integration: Accelerate inference with DeepSparse.

- Comet Logging: Log and visualize experiments using Comet. 🌟 NEW

Our key integrations with leading AI platforms extend Ultralytics' capabilities, enhancing tasks like dataset labeling, training, visualization, and model management. Explore how Ultralytics works with Weights & Biases, Comet ML, Roboflow, and Intel OpenVINO to streamline your AI workflow.

| Ultralytics HUB 🚀 | W&B | Comet ⭐ NEW | Neural Magic |
|---|---|---|---|
| Streamline YOLO workflows: Label, train, and deploy effortlessly with Ultralytics HUB. Try now! | Track experiments, hyperparameters, and results with Weights & Biases. | Free forever, Comet lets you save YOLO models, resume training, and interactively visualize and debug predictions. | Run YOLO inference up to 6x faster with Neural Magic DeepSparse. |

Experience seamless AI development with Ultralytics HUB ⭐, your all-in-one platform for data visualization, YOLO 🚀 model training, and deployment, with no coding required. Convert images into actionable insights and realize your AI projects effortlessly using our advanced platform and intuitive Ultralytics App. Begin your journey for free today!

YOLOv3 marked a significant step in the evolution of real-time object detectors. Its key contributions include:

- Multi-Scale Predictions: Detecting objects at three different scales using feature pyramids, improving accuracy for objects of varying sizes, especially small ones.

- Improved Backbone: Utilizing Darknet-53, a deeper and more complex network than its predecessor (Darknet-19), enhancing feature extraction capabilities.

- Class Prediction: Using logistic classifiers instead of softmax for class predictions, allowing for multi-label classification where an object can belong to multiple categories.
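The numbers behind these ideas are easy to work through. The sketch below assumes a 416x416 input, the usual YOLOv3 strides of 32, 16 and 8, three anchors per scale, and the 80 COCO classes, and computes the grid size, output channels, and box count for each scale. It then compares independent logistic (sigmoid) class scores with softmax on a set of hypothetical logits; the helper names are illustrative.

```python
import math


def prediction_grid(img_size=416, strides=(32, 16, 8), anchors_per_scale=3, num_classes=80):
    """Grid size, output channels, and box count for each of YOLOv3's three detection scales."""
    channels = anchors_per_scale * (5 + num_classes)  # 4 box coords + 1 objectness + class scores
    grids = []
    for stride in strides:
        side = img_size // stride
        grids.append({"stride": stride, "grid": side, "channels": channels,
                      "boxes": side * side * anchors_per_scale})
    return grids


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


scales = prediction_grid()
for s in scales:
    print(s)  # grids of 13, 26 and 52 cells, each with 255 channels
print("total boxes:", sum(s["boxes"] for s in scales))  # → total boxes: 10647

# Hypothetical class logits for one box, e.g. "person", "woman", "car".
logits = [2.0, 1.5, -1.0]
independent = [sigmoid(v) for v in logits]  # each score in (0, 1), no sum constraint
exclusive = softmax(logits)                 # scores compete and sum to 1
print([i for i, p in enumerate(independent) if p > 0.5])  # → [0, 1]
print([i for i, p in enumerate(exclusive) if p > 0.5])    # → [0]
```

With logistic scores, "person" and "woman" can both pass a 0.5 threshold for the same box, which is what makes multi-label predictions possible; softmax forces them to compete.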

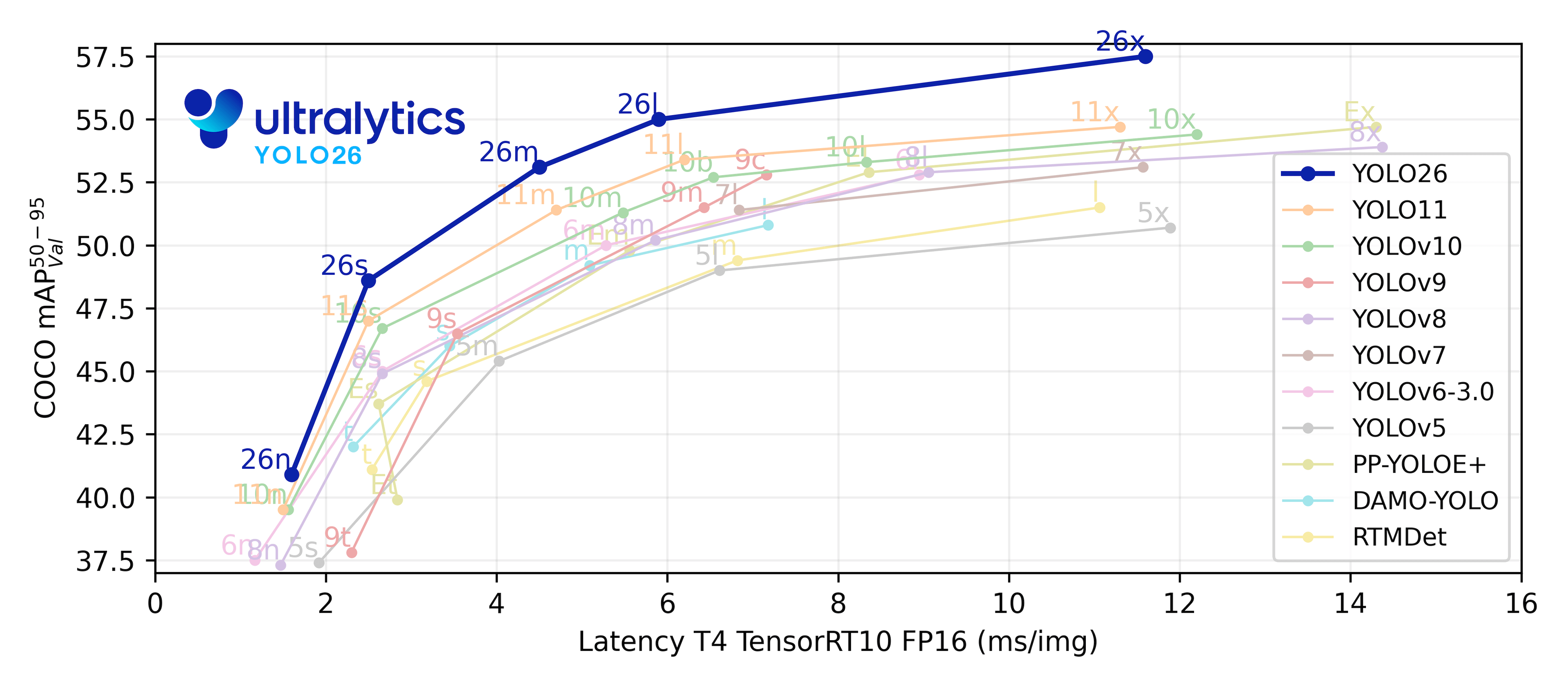

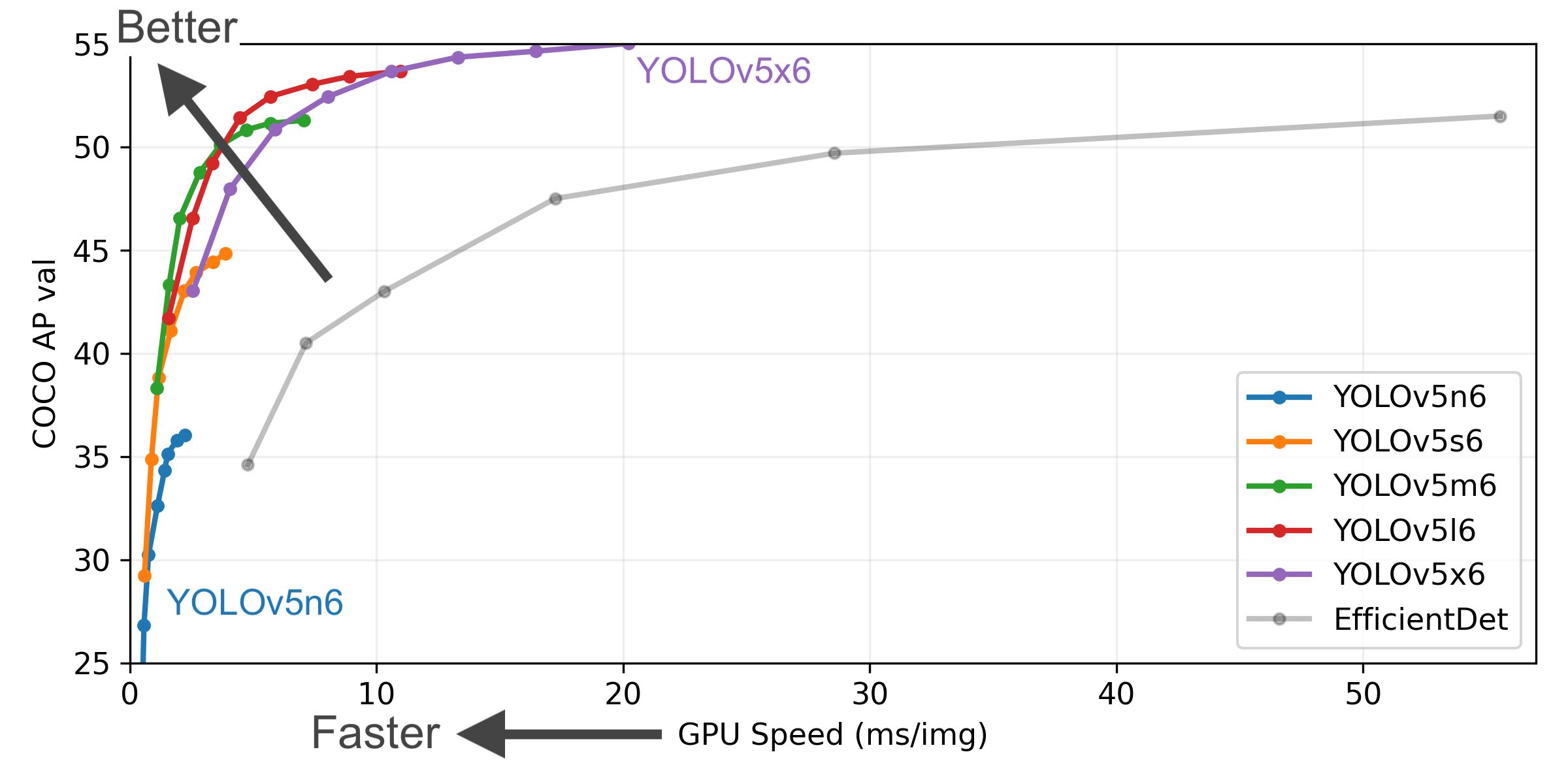

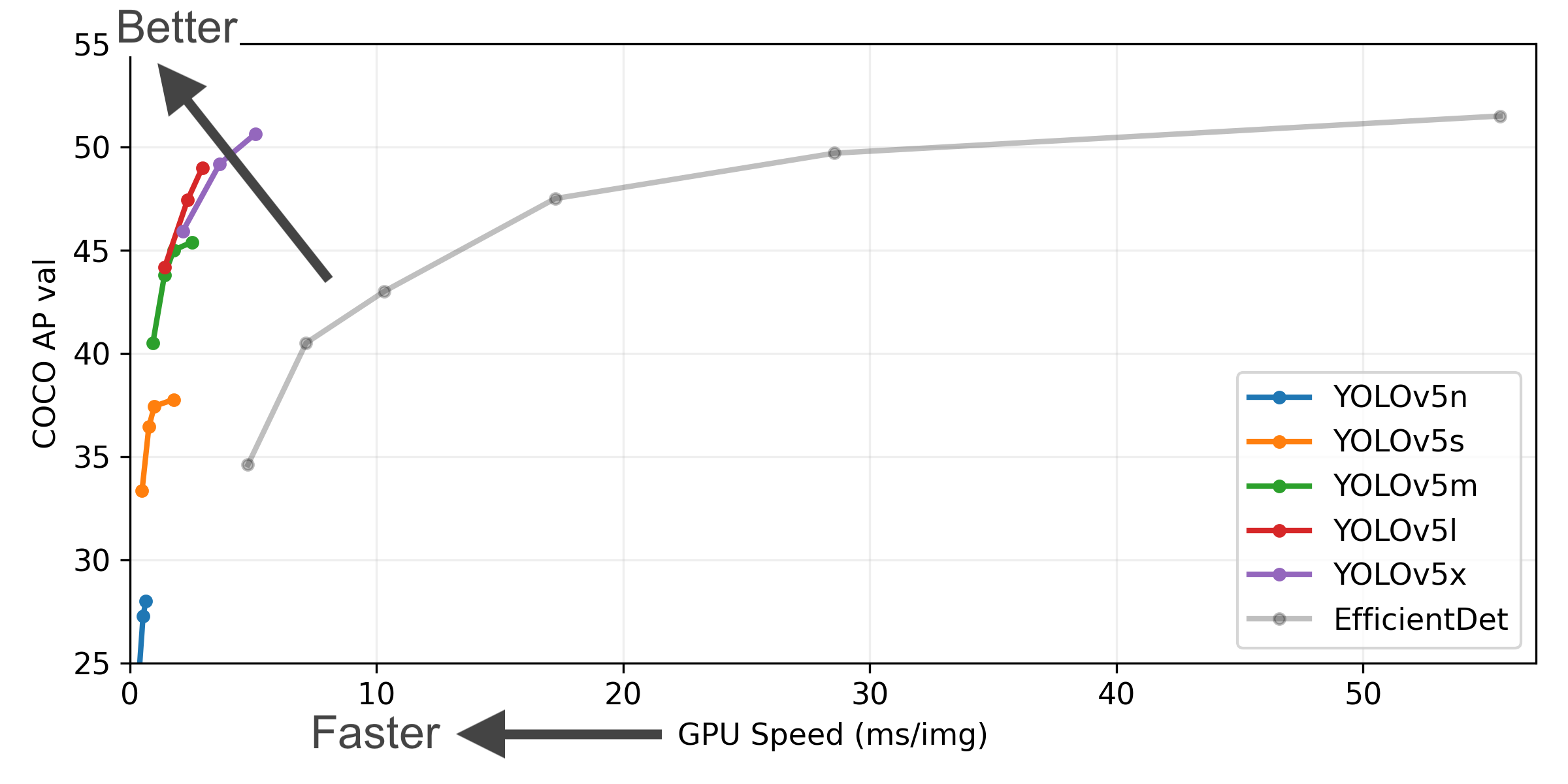

While newer models like YOLOv5 and YOLOv8 offer further improvements in speed and accuracy, YOLOv3 remains a foundational model in the field and is still widely used and studied. The benchmarks below cover the later YOLOv5 models for context.

Figure Notes

- COCO AP val denotes the mAP@0.5:0.95 metric, measured on the 5000-image COCO val2017 dataset at inference sizes from 256 to 1536. See COCO dataset.

- GPU Speed measures the average inference time per image on the COCO val2017 dataset using an AWS p3.2xlarge V100 instance at batch size 32.

- EfficientDet data is taken from the google/automl repository at batch size 8.

- Reproduce benchmark results using

python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt
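The mAP@0.5:0.95 metric in these notes averages AP over ten IoU thresholds, 0.50 to 0.95 in steps of 0.05. A minimal sketch of the two ingredients follows: box IoU for corner-format (x1, y1, x2, y2) boxes, and the final averaging. The AP values passed to map_50_95 are assumed to be already computed (and averaged over classes) at each threshold; the full precision-recall computation is omitted, and the helper names are illustrative.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


# The ten COCO IoU thresholds 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(ap_per_threshold):
    """Mean of the AP values computed at each of the ten IoU thresholds."""
    assert len(ap_per_threshold) == len(IOU_THRESHOLDS)
    return sum(ap_per_threshold) / len(ap_per_threshold)


pred, truth = (2, 0, 12, 10), (0, 0, 10, 10)
iou = box_iou(pred, truth)
print(round(iou, 3))                          # → 0.667
print(sum(iou >= t for t in IOU_THRESHOLDS))  # → 4 (a match only at 0.50, 0.55, 0.60, 0.65)
```

A prediction that overlaps its target by 0.667 therefore counts as correct at four thresholds and as a miss at the other six, which is why mAP@0.5:0.95 rewards tight localization more than mAP@0.5 does.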

This table shows YOLOv5 models trained on the COCO dataset, often used as benchmarks.

| Model | size (pixels) | mAP val 50-95 | mAP val 50 | Speed CPU b1 (ms) | Speed V100 b1 (ms) | Speed V100 b32 (ms) | params (M) | FLOPs @640 (B) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5n | 640 | 28.0 | 45.7 | 45 | 6.3 | 0.6 | 1.9 | 4.5 |
| YOLOv5s | 640 | 37.4 | 56.8 | 98 | 6.4 | 0.9 | 7.2 | 16.5 |
| YOLOv5m | 640 | 45.4 | 64.1 | 224 | 8.2 | 1.7 | 21.2 | 49.0 |
| YOLOv5l | 640 | 49.0 | 67.3 | 430 | 10.1 | 2.7 | 46.5 | 109.1 |
| YOLOv5x | 640 | 50.7 | 68.9 | 766 | 12.1 | 4.8 | 86.7 | 205.7 |
| YOLOv5n6 | 1280 | 36.0 | 54.4 | 153 | 8.1 | 2.1 | 3.2 | 4.6 |
| YOLOv5s6 | 1280 | 44.8 | 63.7 | 385 | 8.2 | 3.6 | 12.6 | 16.8 |
| YOLOv5m6 | 1280 | 51.3 | 69.3 | 887 | 11.1 | 6.8 | 35.7 | 50.0 |
| YOLOv5l6 | 1280 | 53.7 | 71.3 | 1784 | 15.8 | 10.5 | 76.8 | 111.4 |
| YOLOv5x6 | 1280 | 55.0 | 72.7 | 3136 | 26.2 | 19.4 | 140.7 | 209.8 |
| YOLOv5x6 + TTA | 1536 | 55.8 | 72.7 | - | - | - | - | - |

Table Notes

- All YOLOv5 checkpoints shown are trained to 300 epochs with default settings. Nano and Small models use hyp.scratch-low.yaml hyperparameters; all others use hyp.scratch-high.yaml. See the Hyperparameter Tuning Guide.

- mAP val values are for single-model, single-scale evaluation on the COCO val2017 dataset. Reproduce using: python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65

- Speed metrics are averaged over COCO val images using an AWS p3.2xlarge instance. NMS times (~1 ms/img) are not included. Reproduce using: python val.py --data coco.yaml --img 640 --task speed --batch 1

- TTA (Test Time Augmentation) includes reflection and scale augmentations. Reproduce using: python val.py --data coco.yaml --img 1536 --iou 0.7 --augment
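TTA runs the model on several augmented copies of an image and maps every prediction back to the original frame before merging. The sketch below shows only that bookkeeping. predict is a hypothetical callable standing in for the model, and the three scales with a left-right flip on the middle view are assumptions modeled loosely on the YOLOv5 codebase, not values taken from this README.

```python
def descale(boxes, scale):
    """Map (x1, y1, x2, y2) boxes from a resized image back to original pixel coordinates."""
    return [(x1 / scale, y1 / scale, x2 / scale, y2 / scale) for x1, y1, x2, y2 in boxes]


def unflip_lr(boxes, width):
    """Undo a left-right flip for boxes in an image that is `width` pixels wide."""
    return [(width - x2, y1, width - x1, y2) for x1, y1, x2, y2 in boxes]


def tta_boxes(predict, width, scales=(1.0, 0.83, 0.67), flips=(False, True, False)):
    """Collect boxes from every augmented view, expressed in original image coordinates.

    `predict(scale, flip)` is a hypothetical callable: it resizes the image by `scale`,
    optionally flips it left-right, runs the detector, and returns boxes in the
    augmented image's pixel coordinates.
    """
    merged = []
    for scale, flip in zip(scales, flips):
        boxes = descale(predict(scale, flip), scale)  # undo the resize first
        if flip:
            boxes = unflip_lr(boxes, width)  # then undo the flip using the original width
        merged.extend(boxes)
    return merged  # a real pipeline would now run NMS over the merged boxes


# Toy stand-in for a detector: one object at (10, 20, 50, 60) in a 100-px-wide image.
TRUE_BOX, WIDTH = (10.0, 20.0, 50.0, 60.0), 100.0


def fake_predict(scale, flip):
    x1, y1, x2, y2 = (v * scale for v in TRUE_BOX)
    if flip:
        x1, x2 = WIDTH * scale - x2, WIDTH * scale - x1  # mirror within the resized width
    return [(x1, y1, x2, y2)]


for box in tta_boxes(fake_predict, WIDTH):
    print(tuple(round(v, 6) for v in box))  # every view maps back to (10.0, 20.0, 50.0, 60.0)
```

Because each view sees the object at a different size and orientation, the merged, de-augmented boxes give NMS more candidates to choose from, which is where the accuracy gain (and the extra inference cost) comes from.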

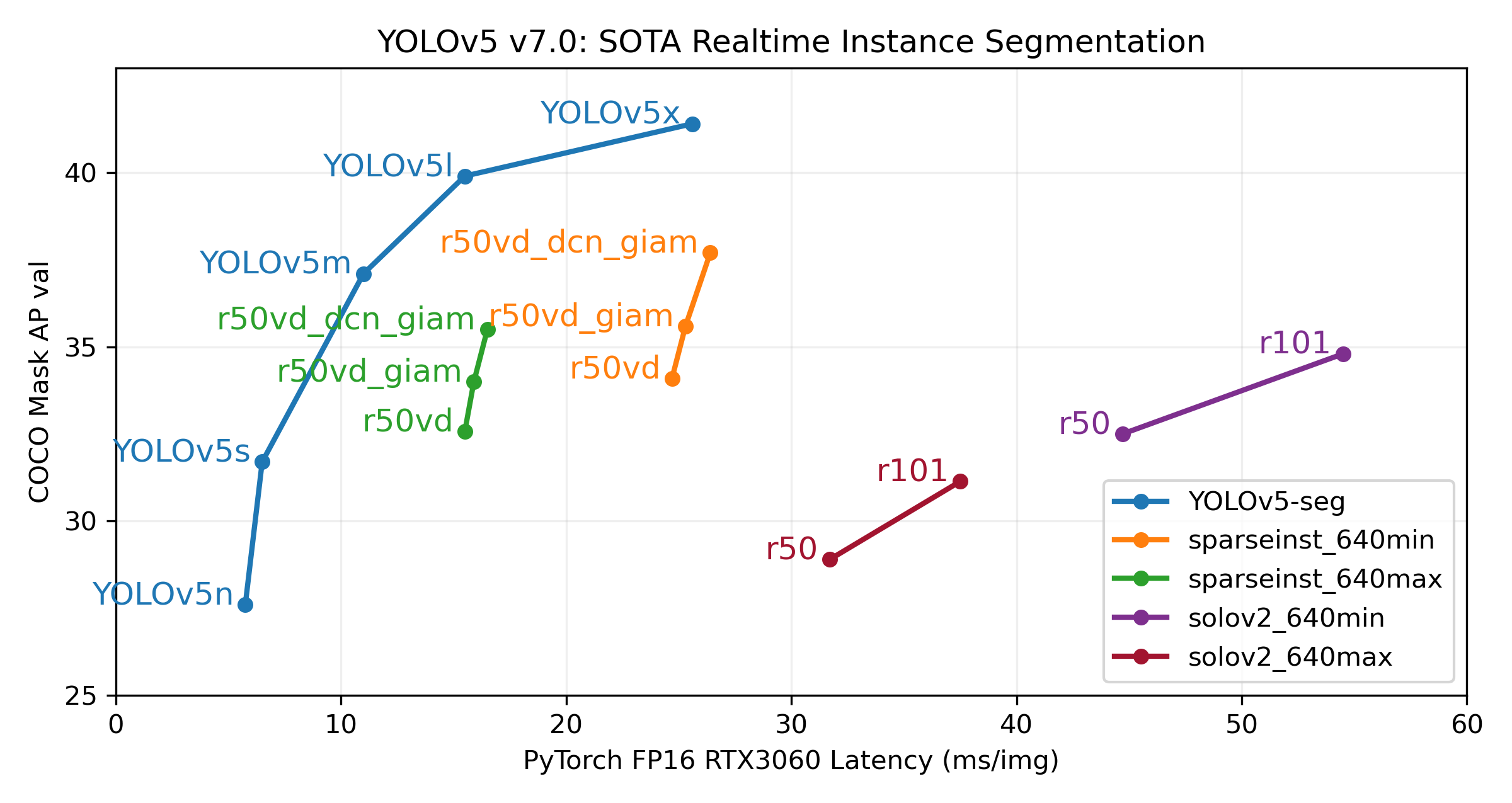

While YOLOv3 primarily focused on object detection, later Ultralytics models like YOLOv5 introduced instance segmentation capabilities. The YOLOv5 release v7.0 included segmentation models that achieved state-of-the-art performance. These models are easy to train, validate, and deploy. See the YOLOv5 Release Notes and the YOLOv5 Segmentation Colab Notebook for more details and tutorials.

Segmentation Checkpoints (YOLOv5)

YOLOv5 segmentation models were trained on the COCO-segments dataset for 300 epochs at an image size of 640 using A100 GPUs. Models were exported to ONNX FP32 for CPU speed tests and TensorRT FP16 for GPU speed tests on Google Colab Pro.

| Model | size (pixels) | mAP box 50-95 | mAP mask 50-95 | Train time 300 epochs A100 (hours) | Speed ONNX CPU (ms) | Speed TRT A100 (ms) | params (M) | FLOPs @640 (B) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5n-seg | 640 | 27.6 | 23.4 | 80:17 | 62.7 | 1.2 | 2.0 | 7.1 |
| YOLOv5s-seg | 640 | 37.6 | 31.7 | 88:16 | 173.3 | 1.4 | 7.6 | 26.4 |
| YOLOv5m-seg | 640 | 45.0 | 37.1 | 108:36 | 427.0 | 2.2 | 22.0 | 70.8 |
| YOLOv5l-seg | 640 | 49.0 | 39.9 | 66:43 (2x) | 857.4 | 2.9 | 47.9 | 147.7 |
| YOLOv5x-seg | 640 | 50.7 | 41.4 | 62:56 (3x) | 1579.2 | 4.5 | 88.8 | 265.7 |

- All checkpoints are trained for 300 epochs with the SGD optimizer (lr0=0.01, weight_decay=5e-5) at image size 640 using default settings. Runs are logged at W&B YOLOv5_v70_official.

- Accuracy values are for single-model, single-scale evaluation on the COCO dataset. Reproduce with: python segment/val.py --data coco.yaml --weights yolov5s-seg.pt

- Speed is averaged over 100 inference images on a Colab Pro A100 High-RAM instance (inference only; NMS adds ~1 ms/image). Reproduce with: python segment/val.py --data coco.yaml --weights yolov5s-seg.pt --batch 1

- Export to ONNX (FP32) and TensorRT (FP16) is done with export.py. Reproduce with: python export.py --weights yolov5s-seg.pt --include engine --device 0 --half
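The mAP box and mAP mask columns differ mainly in how overlap is measured: box mAP uses the IoU of bounding boxes, while mask mAP uses pixel-wise IoU between predicted and ground-truth masks. A minimal mask IoU sketch on tiny hypothetical masks follows; mask_iou is an illustrative helper, and real evaluation works on full-resolution masks, typically with array libraries rather than nested lists.

```python
def mask_iou(mask_a, mask_b):
    """Pixel-wise IoU of two same-sized binary masks given as nested lists of 0/1."""
    inter = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            inter += int(bool(a) and bool(b))  # pixel set in both masks
            union += int(bool(a) or bool(b))   # pixel set in either mask
    return inter / union if union else 0.0


# Hypothetical 4x4 masks.
L_SHAPE = [
    [1, 1, 1, 1],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
]
LEFT_COLUMN = [
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
]
BOTTOM_RIGHT = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

print(round(mask_iou(L_SHAPE, LEFT_COLUMN), 3))  # → 0.571 (4 shared pixels / 7 in the union)
print(mask_iou(L_SHAPE, BOTTOM_RIGHT))           # → 0.0, although the bounding boxes overlap
```

The second pair shows why mask mAP is typically lower than box mAP for the same model: boxes that overlap can still enclose masks that share no pixels.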

Segmentation Usage Examples (YOLOv5)

Train YOLOv5 segmentation models. Use --data coco128-seg.yaml for auto-download, or download COCO-segments manually with bash data/scripts/get_coco.sh --train --val --segments and then use --data coco.yaml.

# Single-GPU Training

python segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640

# Multi-GPU DDP Training

python -m torch.distributed.run --nproc_per_node 4 --master_port 1 segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640 --device 0,1,2,3

Validate YOLOv5s-seg mask mAP on the COCO dataset:

# Download COCO val segments split (780MB, 5000 images)

bash data/scripts/get_coco.sh --val --segments

# Validate performance

python segment/val.py --weights yolov5s-seg.pt --data coco.yaml --img 640

Use a pretrained YOLOv5m-seg model for prediction:

# Predict objects and masks in an image

python segment/predict.py --weights yolov5m-seg.pt --source data/images/bus.jpg

# Load model via PyTorch Hub (Note: Segmentation inference might require specific handling)

# model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5m-seg.pt")

Export a YOLOv5s-seg model to ONNX and TensorRT formats:

# Export model for deployment

python export.py --weights yolov5s-seg.pt --include onnx engine --img 640 --device 0

Similar to segmentation, image classification capabilities were formally introduced in later Ultralytics YOLO versions, specifically YOLOv5 release v6.2. These models allow for training, validation, and deployment for classification tasks. Check the YOLOv5 Release Notes and the YOLOv5 Classification Colab Notebook for detailed information and examples.

Classification Checkpoints (YOLOv5 & Others)

YOLOv5-cls models were trained on ImageNet for 90 epochs using a 4xA100 instance. ResNet and EfficientNet models were trained alongside for comparison using the same settings. Models were exported to ONNX FP32 (CPU speed) and TensorRT FP16 (GPU speed) and tested on Google Colab Pro.

| Model | size (pixels) | acc top1 | acc top5 | Training 90 epochs 4xA100 (hours) | Speed ONNX CPU (ms) | Speed TensorRT V100 (ms) | params (M) | FLOPs @224 (B) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5n-cls | 224 | 64.6 | 85.4 | 7:59 | 3.3 | 0.5 | 2.5 | 0.5 |
| YOLOv5s-cls | 224 | 71.5 | 90.2 | 8:09 | 6.6 | 0.6 | 5.4 | 1.4 |
| YOLOv5m-cls | 224 | 75.9 | 92.9 | 10:06 | 15.5 | 0.9 | 12.9 | 3.9 |
| YOLOv5l-cls | 224 | 78.0 | 94.0 | 11:56 | 26.9 | 1.4 | 26.5 | 8.5 |
| YOLOv5x-cls | 224 | 79.0 | 94.4 | 15:04 | 54.3 | 1.8 | 48.1 | 15.9 |
| ResNet18 | 224 | 70.3 | 89.5 | 6:47 | 11.2 | 0.5 | 11.7 | 3.7 |
| ResNet34 | 224 | 73.9 | 91.8 | 8:33 | 20.6 | 0.9 | 21.8 | 7.4 |
| ResNet50 | 224 | 76.8 | 93.4 | 11:10 | 23.4 | 1.0 | 25.6 | 8.5 |
| ResNet101 | 224 | 78.5 | 94.3 | 17:10 | 42.1 | 1.9 | 44.5 | 15.9 |
| EfficientNet_b0 | 224 | 75.1 | 92.4 | 13:03 | 12.5 | 1.3 | 5.3 | 1.0 |
| EfficientNet_b1 | 224 | 76.4 | 93.2 | 17:04 | 14.9 | 1.6 | 7.8 | 1.5 |
| EfficientNet_b2 | 224 | 76.6 | 93.4 | 17:10 | 15.9 | 1.6 | 9.1 | 1.7 |
| EfficientNet_b3 | 224 | 77.7 | 94.0 | 19:19 | 18.9 | 1.9 | 12.2 | 2.4 |

Table Notes

- All checkpoints are trained for 90 epochs with the SGD optimizer (lr0=0.001, weight_decay=5e-5) at image size 224 using default settings. Runs are logged at W&B YOLOv5-Classifier-v6-2.

- Accuracy values are for single-model, single-scale evaluation on the ImageNet-1k dataset. Reproduce with: python classify/val.py --data ../datasets/imagenet --img 224

- Speed is averaged over 100 inference images using a Google Colab Pro V100 High-RAM instance. Reproduce with: python classify/val.py --data ../datasets/imagenet --img 224 --batch 1

- Export to ONNX (FP32) and TensorRT (FP16) is done with export.py. Reproduce with: python export.py --weights yolov5s-cls.pt --include engine onnx --imgsz 224
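The acc top1 and acc top5 columns count an image as correct when its true class is the highest-scoring prediction (top-1) or among the five highest (top-5). A small sketch of that computation on hypothetical scores follows; topk_accuracy is an illustrative helper, not part of classify/val.py.

```python
def topk_accuracy(scores, labels, k=1):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)


# Hypothetical scores for 4 images over 7 classes (not real model output).
scores = [
    [0.10, 0.60, 0.05, 0.15, 0.04, 0.03, 0.02],  # true class 1 ranks 1st
    [0.30, 0.25, 0.20, 0.10, 0.08, 0.04, 0.03],  # true class 2 ranks 3rd
    [0.40, 0.20, 0.15, 0.10, 0.08, 0.05, 0.02],  # true class 6 ranks 7th
    [0.50, 0.20, 0.10, 0.08, 0.06, 0.04, 0.02],  # true class 0 ranks 1st
]
labels = [1, 2, 6, 0]

print(topk_accuracy(scores, labels, k=1))  # → 0.5
print(topk_accuracy(scores, labels, k=5))  # → 0.75
```

The second image is a top-1 miss but a top-5 hit, which is why top-5 accuracy is always at least as high as top-1.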

Classification Usage Examples (YOLOv5)

Train YOLOv5 classification models. Datasets like MNIST, Fashion-MNIST, CIFAR10, CIFAR100, Imagenette, Imagewoof, and ImageNet can be auto-downloaded using the --data argument (e.g., --data mnist).

# Single-GPU Training on CIFAR-100

python classify/train.py --model yolov5s-cls.pt --data cifar100 --epochs 5 --img 224 --batch 128

# Multi-GPU DDP Training on ImageNet

python -m torch.distributed.run --nproc_per_node 4 --master_port 1 classify/train.py --model yolov5s-cls.pt --data imagenet --epochs 5 --img 224 --device 0,1,2,3

Validate YOLOv5m-cls accuracy on the ImageNet-1k validation set:

# Download ImageNet validation split (6.3G, 50000 images)

bash data/scripts/get_imagenet.sh --val

# Validate model accuracy

python classify/val.py --weights yolov5m-cls.pt --data ../datasets/imagenet --img 224

Use a pretrained YOLOv5s-cls model to classify an image:

# Classify an image

python classify/predict.py --weights yolov5s-cls.pt --source data/images/bus.jpg

# Load model via PyTorch Hub

# model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s-cls.pt")

Export trained classification models (YOLOv5s-cls, ResNet50, EfficientNet-B0) to ONNX and TensorRT formats:

# Export models for deployment

python export.py --weights yolov5s-cls.pt resnet50.pt efficientnet_b0.pt --include onnx engine --img 224

Get started quickly with our verified environments.

We welcome your contributions! Making contributions to YOLOv3 should be easy and transparent. Please refer to our Contributing Guide for instructions on getting started. We also encourage you to fill out the Ultralytics Survey to share your feedback. A huge thank you to all our contributors!

Ultralytics provides two licensing options to suit different needs:

- AGPL-3.0 License: Ideal for students, researchers, and enthusiasts, this OSI-approved open-source license encourages open collaboration and knowledge sharing. See the LICENSE file for details.

- Enterprise License: Tailored for commercial applications, this license allows the integration of Ultralytics software and AI models into commercial products and services, bypassing the open-source requirements of AGPL-3.0. For commercial use cases, please contact us via Ultralytics Licensing.

For bug reports and feature requests related to YOLOv3, please visit GitHub Issues. For general questions, discussions, and community interaction, join our Discord server!