This is the 2nd-place solution to the VIPriors Image Classification Challenge, held as an ECCV 2020 workshop.

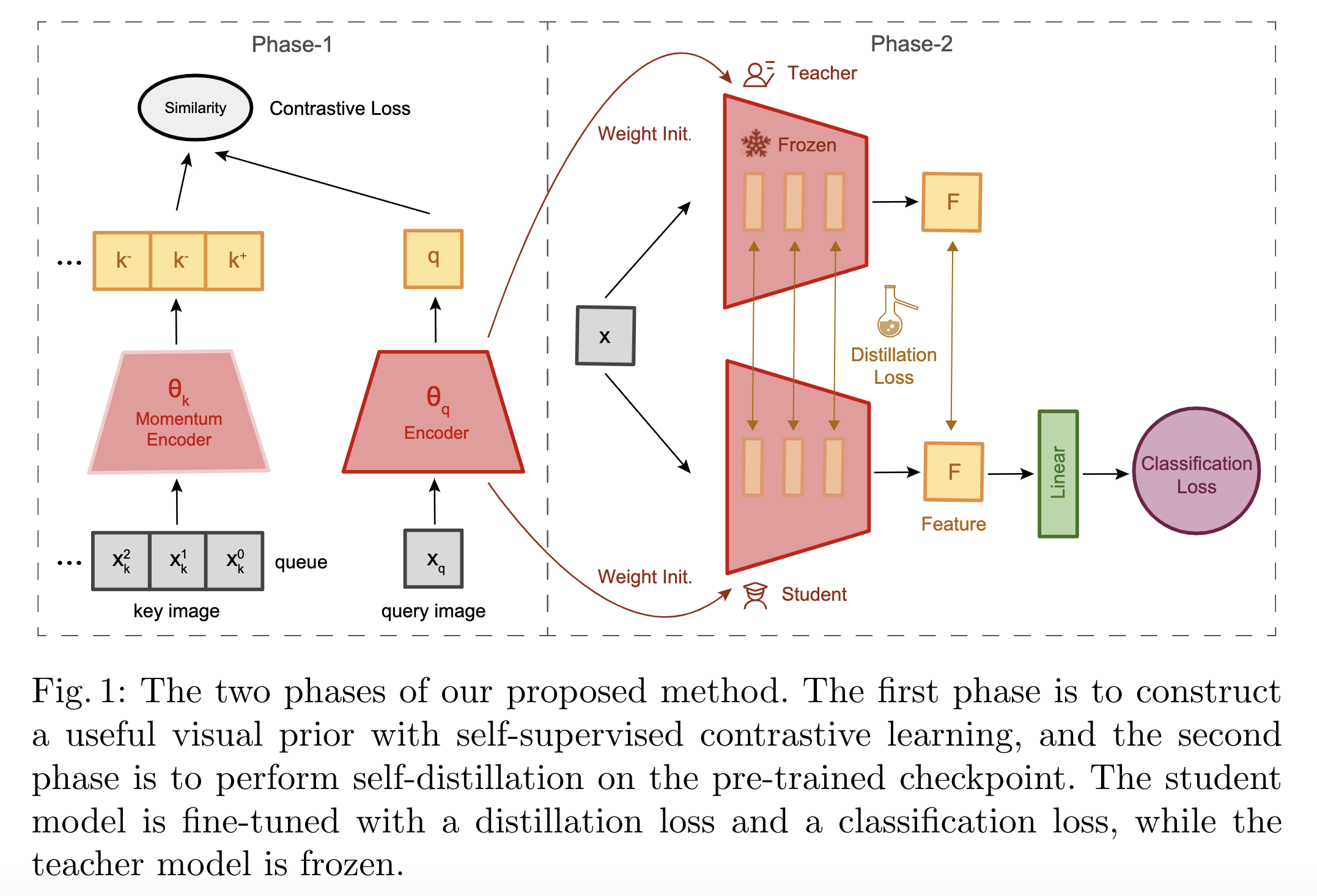

The two phases of our proposed method. The first phase is to construct a useful visual prior with self-supervised contrastive learning, and the second phase is to perform self-distillation on the pre-trained checkpoint. The student model is trained with a distillation loss and a classification loss, while the teacher model is frozen.
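As a rough illustration of the second phase, the sketch below shows how a student's training objective could combine a classification loss with a distillation loss against a frozen teacher. It is framework-free and only demonstrates the arithmetic. Several parts are assumptions rather than this repository's code: the cosine feature-matching form of the distillation loss, the `distill_weight` and `eps` parameters, and every function name are hypothetical.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_total = math.log(sum(math.exp(z - m) for z in logits))
    return [z - m - log_total for z in logits]


def smoothed_cross_entropy(logits, label, eps=0.1):
    """Cross-entropy against a label-smoothed target.

    eps is an assumed smoothing strength, not a value taken from the repository.
    """
    k = len(logits)
    log_probs = log_softmax(logits)
    # Spread eps uniformly over all classes, keep 1 - eps on the true class.
    target = [eps / k + (1.0 - eps if i == label else 0.0) for i in range(k)]
    return -sum(t * lp for t, lp in zip(target, log_probs))


def cosine_distill_loss(student_feat, teacher_feat):
    """Assumed distillation loss: 1 - cosine similarity of the two features.

    The teacher feature is treated as a constant, mirroring a frozen teacher.
    """
    dot = sum(s * t for s, t in zip(student_feat, teacher_feat))
    norm_s = math.sqrt(sum(s * s for s in student_feat))
    norm_t = math.sqrt(sum(t * t for t in teacher_feat))
    return 1.0 - dot / max(norm_s * norm_t, 1e-12)


def student_loss(student_logits, label, student_feat, teacher_feat,
                 distill_weight=1.0, eps=0.1):
    """Classification loss plus weighted distillation loss (hypothetical weighting)."""
    cls = smoothed_cross_entropy(student_logits, label, eps)
    distill = cosine_distill_loss(student_feat, teacher_feat)
    return cls + distill_weight * distill
```

In a real training loop the same combination would be computed on batched tensors, with gradients flowing only through the student.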

Our solution is a two-phase pipeline trained only on the provided subset of ImageNet; no external data or checkpoints are used.

Self-supervised pretraining.

Please follow the instructions in the moco folder.

Self-distillation and classification finetuning.

cd sup_train_distill

python3 train_selfsup.py --data_path /path/to/data/ --net_type self_sup_r50 --input-res 448 --pretrained /path/to/unsupervise_pretrained_checkpoint --save_path /path/to/save --batch_size 256 --autoaug --label_smooth

Please consider citing our paper in your publications if the project helps your research. The BibTeX reference is as follows.

@inproceedings{zhao2020distilling,

title={Distilling visual priors from self-supervised learning},

author={Zhao, Bingchen and Wen, Xin},

booktitle={European Conference on Computer Vision},

pages={422--429},

year={2020},

organization={Springer}

}

Bingchen Zhao: zhaobc.gm@gmail.com