
Description

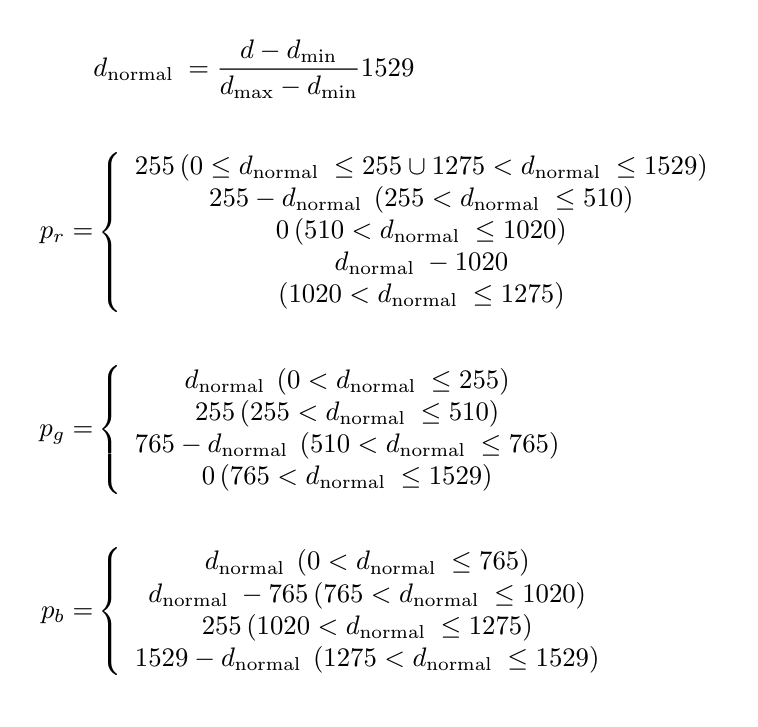

Intel has posted the following algorithm for depth compression: https://dev.intelrealsense.com/docs/depth-image-compression-by-colorization-for-intel-realsense-depth-cameras

After a quick scan, this seems to offer the best trade-off between recovered PSNR and algorithmic complexity, so it is important that the post be usable as written.

I've found two critical issues with the formula as presented (attached). First, there are clear counterexamples for which the transformation is not invertible. In fact, I'm not sure when it does work, so I assume there is an error in the posted formula. Consider the following case:

depth = 5

d_min = 1

d_max = 100

This gets mapped to the RGB value (255, 61, 61), which then gets mapped back to the depth value of 0 instead of 5.
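To make counterexamples like this easy to reproduce, here is a minimal sketch of a round-trip check. It does not contain the formula from the post: `find_roundtrip_failures`, `toy_encode`, and `toy_decode` are hypothetical names, and the toy codec is only a stand-in that shows the expected call shape. Anyone transcribing the posted formula (or the SDK version, once located) could plug it in as `encode` and `decode`.

```python
from typing import Callable, List, Tuple

RGB = Tuple[int, int, int]


def find_roundtrip_failures(
    encode: Callable[[int, int, int], RGB],
    decode: Callable[[RGB, int, int], int],
    d_min: int,
    d_max: int,
    tolerance: int = 0,
) -> List[Tuple[int, RGB, int]]:
    """Return (depth, rgb, recovered) for every depth that does not round-trip.

    `tolerance` allows for quantization error when the depth range has more
    values than the color encoding has levels.
    """
    failures = []
    for depth in range(d_min, d_max + 1):
        rgb = encode(depth, d_min, d_max)
        recovered = decode(rgb, d_min, d_max)
        if abs(recovered - depth) > tolerance:
            failures.append((depth, rgb, recovered))
    return failures


# Stand-in codec (NOT the formula from the post): pack the offset from d_min
# into the red (high byte) and green (low byte) channels.
def toy_encode(depth: int, d_min: int, d_max: int) -> RGB:
    offset = depth - d_min
    return (offset >> 8, offset & 0xFF, 0)


def toy_decode(rgb: RGB, d_min: int, d_max: int) -> int:
    r, g, _ = rgb
    return d_min + ((r << 8) | g)


if __name__ == "__main__":
    failures = find_roundtrip_failures(toy_encode, toy_decode, d_min=1, d_max=100)
    print(f"{len(failures)} depth values failed to round-trip")
```

With the formula as posted, I would expect depth = 5 to show up in the returned list for d_min = 1 and d_max = 100, based on the values above.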

The second issue is that the algorithm is only partially defined for a normalized depth value of 0. The red channel has a valid value there, but the green and blue channels do not, because the inequalities in their formulas are strict and therefore exclude 0.

The paper claims that the encoder is implemented in the librealsense SDK, but I am not able to find it. Could somebody please help identify the discrepancy between the blog post and the presumably correct SDK implementation?