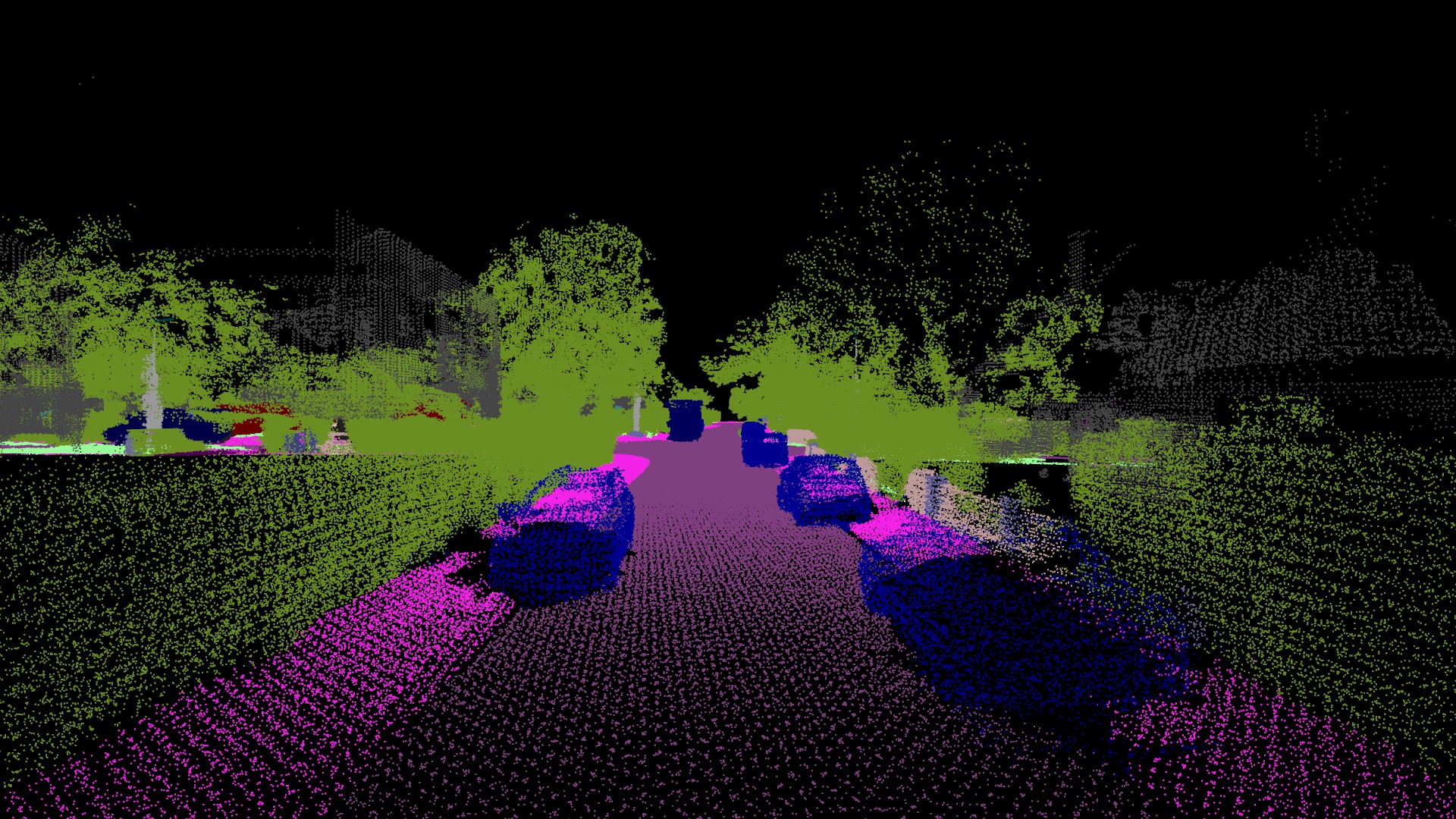

Final project developed for the UPC-AIDL postgraduate course. The main objective is to create a deep learning model able to perform semantic segmentation on a given dense urban point cloud.

For detailed information about this project, check out the documentation folder. We strongly recommend reading it.

First, run these commands to install the repository locally.

1.- Download the repository:

git clone git@github.com:arnauruana/semantic-segmentation-3d.git

Note that the previous command uses the ssh protocol. You can also use https if you don't have any linked SSH key.

2.- Enter the downloaded folder:

cd semantic-segmentation-3d

If you don't want to use conda environments, you can skip this section. Otherwise, you will need to download conda manually from here.

Once installed, you can run the following commands to set up the execution environment.

1.- Create the environment:

conda create --name aidl-project python=3.11 --yes

2.- Activate the environment:

conda activate aidl-project

3.- Install the Python requirements from the next section.

If you skipped the conda setup above, you must install Python yourself (either manually from this link or using your favorite package manager).

sudo pacman -S python --needed --noconfirm

Please note that the previous command only works on Arch-based Linux distributions; you will most likely need to adapt it for yours.

In both cases, you need to execute this line to install all the Python requirements:

pip install -r requirements.txt

The original dataset, formally called IQmulus & TerraMobilita Contest, is a public dataset containing a point cloud from a

The already filtered, normalized and split version of this dataset can be found in the following link. This is the actual dataset we have worked with.

Once you have downloaded it and placed it under the root directory of this project, you can decompress it using the following command:

unzip data.zip

You can also use any other program capable of decompressing files, such as 7-zip, WinRAR or WinZip.
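If none of those tools is available, Python's standard `zipfile` module can do the same job. The following is a minimal sketch, assuming the archive sits in the project root; `extract_archive` is a hypothetical helper name and is not part of this repository.

```python
import zipfile


def extract_archive(archive: str, dest: str = ".") -> list:
    """Extract every member of a zip archive into `dest`.

    Hypothetical helper (not part of the repository). Returns the list
    of member names stored in the archive.
    """
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)  # creates `dest` if it does not exist
        return zf.namelist()
```

For instance, calling `extract_archive("data.zip")` from the project root should be equivalent to the `unzip data.zip` command above.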

If you prefer to work with the raw data and split it manually, download this file instead.

We also provide two pre-trained models called pointnet.pt and graphnet.pt. You can download them from this link.

Once you have downloaded the file and placed it under the root directory of this project, you can decompress it using the following command:

unzip models.zip

If you haven't downloaded the processed and split version of the data and want to generate it manually, use this command:

python src/split.py

Additionally, inside the source code there are two submodules that can be executed independently of each other, depending on the model you want to use.
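Both submodules expose the same three scripts (`train.py`, `test.py` and `infer.py`), so the individual commands listed in this section can also be chained from a small driver. The sketch below is only an illustration: `stage_command` and `run_pipeline` are hypothetical names that do not exist in the repository, and it assumes the scripts take no arguments and are launched from the project root, as the commands in this README do.

```python
import subprocess
import sys

MODELS = ("pointnet", "graphnet")    # submodules under src/
STAGES = ("train", "test", "infer")  # scripts inside each submodule


def stage_command(model: str, stage: str) -> list:
    """Build the command line for one stage of one model."""
    if model not in MODELS:
        raise ValueError(f"unknown model: {model!r}")
    if stage not in STAGES:
        raise ValueError(f"unknown stage: {stage!r}")
    # Use the current interpreter so the active environment is reused.
    return [sys.executable, f"src/{model}/{stage}.py"]


def run_pipeline(model: str, stages=STAGES) -> None:
    """Run the requested stages in order, stopping at the first failure."""
    for stage in stages:
        subprocess.run(stage_command(model, stage), check=True)
```

Calling `run_pipeline("pointnet")` would then train, test and run inference with PointNet in sequence, while `run_pipeline("graphnet", stages=("test",))` would only run the GraphNet test script.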

1.- Train the model:

python src/pointnet/train.py

2.- Test the model:

python src/pointnet/test.py

3.- Infer the model:

python src/pointnet/infer.py

1.- Train the model:

python src/graphnet/train.py

2.- Test the model:

python src/graphnet/test.py

3.- Infer the model:

python src/graphnet/infer.py

In order to uninstall the project and all its previously installed dependencies, you can execute these commands:

1.- Uninstall the Python requirements:

pip uninstall -r requirements.txt

2.- Remove the conda environment:

conda deactivate

conda remove --name aidl-project --all --yes

3.- Remove the repository:

cd ..

rm -rf semantic-segmentation-3d

This project is licensed under the MIT License. See the LICENSE file for further information about it.

Dataset: IQmulus & TerraMobilita Contest

Paper: Bruno Vallet, Mathieu Brédif, Andrés Serna, Beatriz Marcotegui, Nicolas Paparoditis. TerraMobilita/IQmulus urban point cloud analysis benchmark. Computers and Graphics, Elsevier, 2015, 49, pp. 126-133. (https://hal.archives-ouvertes.fr/hal-01167995v1)

Owners:

Supervisors: