(Framework for Adapting Representation Models)

FARM makes cutting edge Transfer Learning for NLP simple. Building upon transformers, FARM is a home for all species of pretrained language models (e.g. BERT) that can be adapted to different domain languages or down-stream tasks. With FARM you can easily create SOTA NLP models for tasks like document classification, NER or question answering. The standardized interfaces for language models and prediction heads allow flexible extension by researchers and easy application for practitioners. Additional experiment tracking and visualizations support you along the way to adapt a SOTA model to your own NLP problem and have a fast proof-of-concept.

- Easy adaptation of language models (e.g. BERT) to your own use case

- Fast integration of custom datasets via Processor class

- Modular design of language model and prediction heads

- Switch between heads or just combine them for multitask learning

- Smooth upgrading to new language models

- Powerful experiment tracking & execution

- Simple deployment and visualization to showcase your model

| Task | BERT | RoBERTa | XLNet | ALBERT |

|---|---|---|---|---|

| Text classification | x | x | x | x |

| NER | x | x | x | x |

| Question Answering | x | x | x | x |

| Language Model Fine-tuning | x | |||

| Text Regression | x | x | x | x |

| Multilabel Text classif. | x | x | x | x |

NEW: Checkout https://demos.deepset.ai to play around with some models

- Full Documentation

- Intro to Transfer Learning (Blog)

- Intro to Transfer Learning & FARM (Video)

- Question Answering Systems Explained (Blog)

- Tutorial 1 (Overview of building blocks): Jupyter notebook 1 or Colab 1

- Tutorial 2 (How to use custom datasets): Jupyter notebook 2 or Colab 2

- Tutorial 3 (How to train and showcase your own QA model): Colab 3

Recommended (because of active development):

git clone https://github.com/deepset-ai/FARM.git cd FARM pip install -r requirements.txt pip install --editable .

If problems occur, please do a git pull. The --editable flag will update changes immediately.

From PyPi:

pip install farm

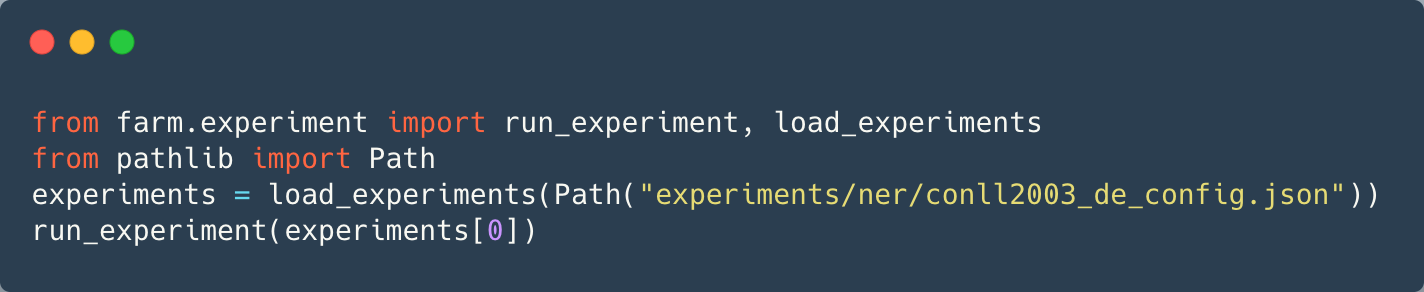

FARM offers two modes for model training:

Option 1: Run experiment(s) from config

Use cases: Training your first model, hyperparameter optimization, evaluating a language model on multiple down-stream tasks.

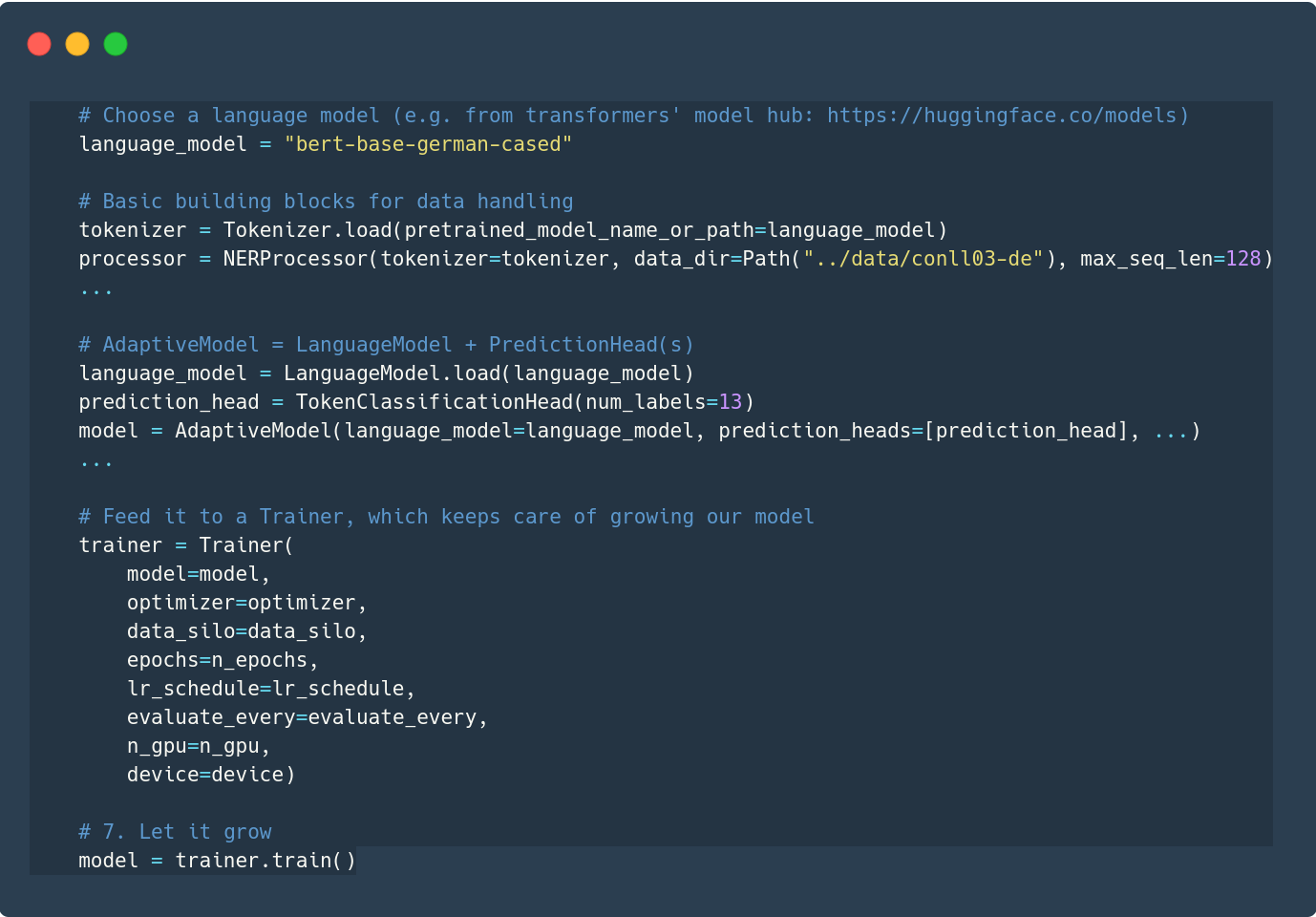

Option 2: Stick together your own building blocks

Usecases: Custom datasets, language models, prediction heads ...

Metrics and parameters of your model training get automatically logged via MLflow. We provide a public MLflow server for testing and learning purposes. Check it out to see your own experiment results! Just be aware: We will start deleting all experiments on a regular schedule to ensure decent server performance for everybody!

- Run

docker-compose up - Open http://localhost:3000 in your browser

One docker container exposes a REST API (localhost:5000) and another one runs a simple demo UI (localhost:3000). You can use both of them individually and mount your own models. Check out the docs for details.

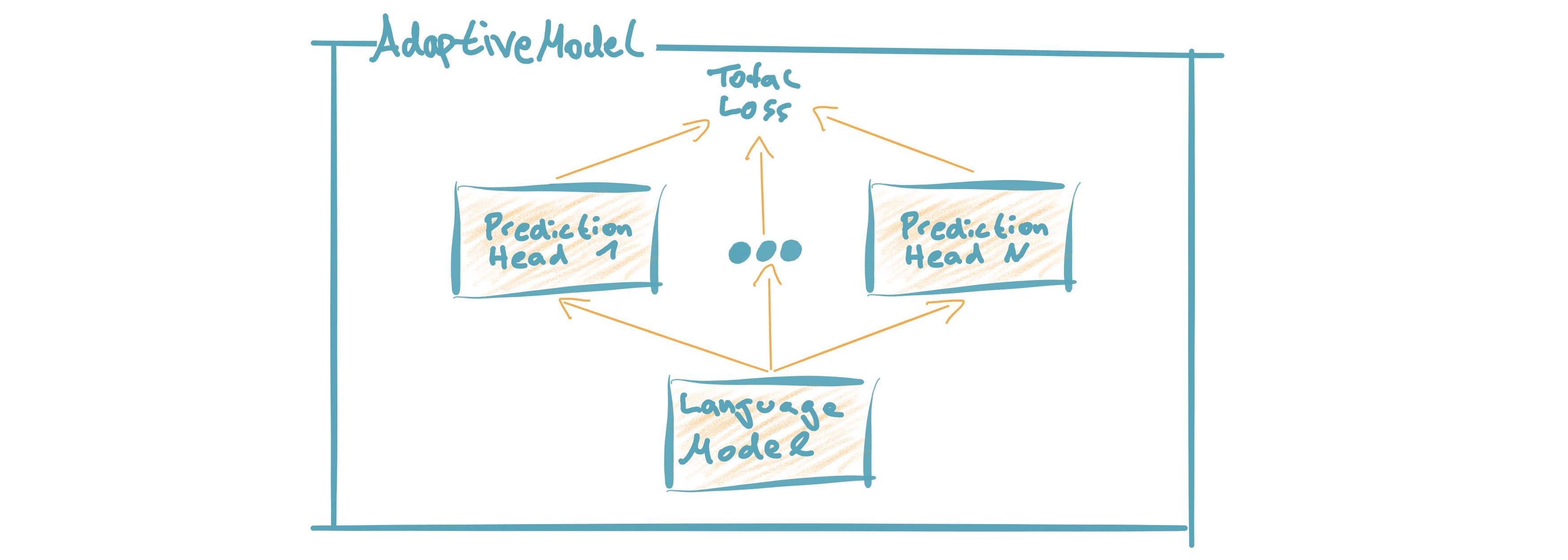

AdaptiveModel = Language Model + Prediction Head(s) With this modular approach you can easily add prediction heads (multitask learning) and re-use them for different types of language model. (Learn more)

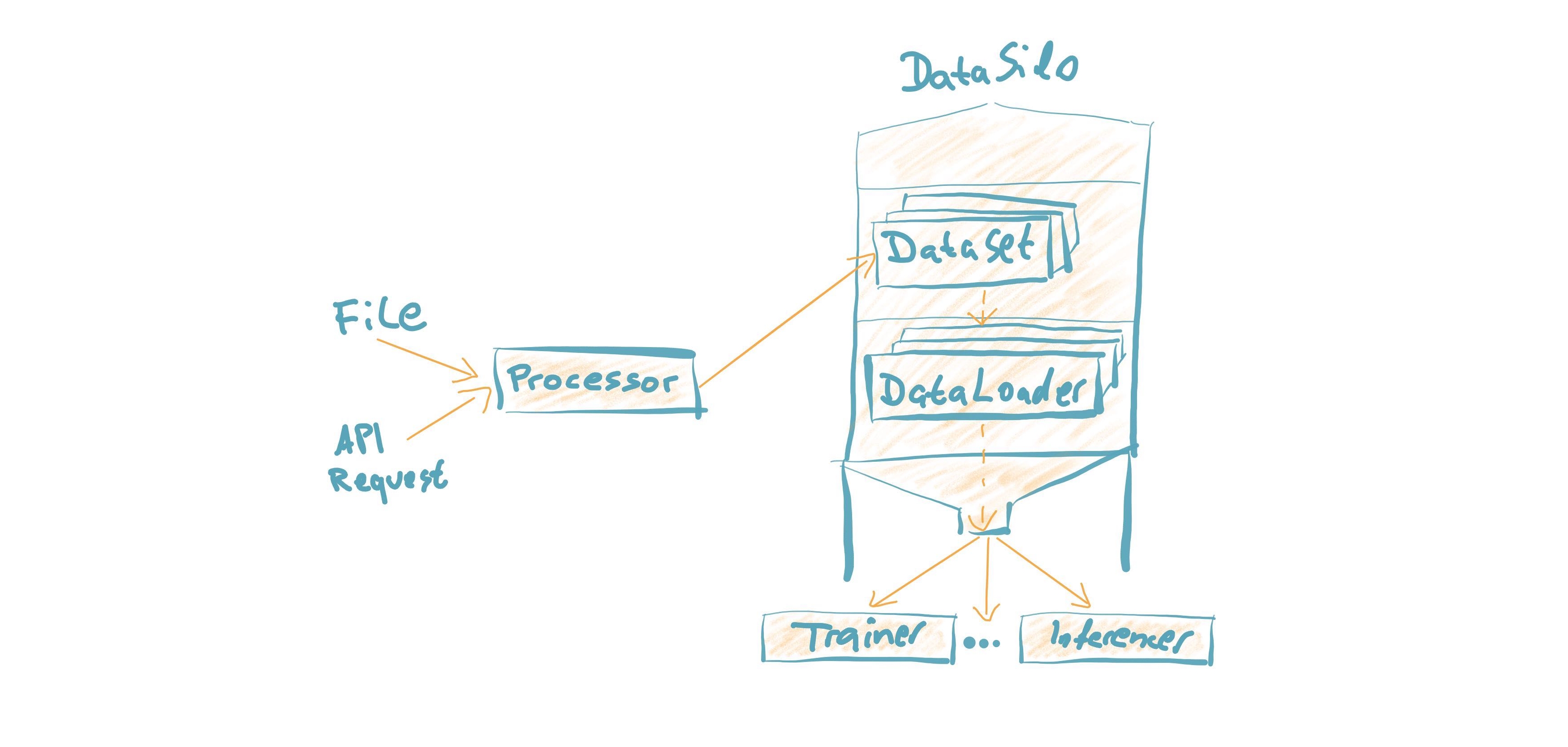

Custom Datasets can be loaded by customizing the Processor. It converts "raw data" into PyTorch Datasets. Much of the heavy lifting is then handled behind the scenes to make it fast & simple to debug. (Learn more)

- AWS SageMaker support (incl. Spot instances)

- Training from Scratch

- Support for more Question Answering styles and datasets

- Additional visualizations and statistics to explore and debug your model

- Enabling large scale deployment for production

- Simpler benchmark models (fasttext, word2vec ...)

- FARM is built upon parts of the great transformers repository from Huggingface. It utilizes their implementations of the BERT model and Tokenizer.

- The original BERT model and paper was published by Jacob Devlin, Ming-Wei Chang, Kenton Lee and Kristina Toutanova.

As of now there is no published paper on FARM. If you want to use or cite our framework, please include the link to this repository. If you are working with the German Bert model, you can link our blog post describing its training details and performance.