Better NER BERT Named-Entity-Recognition

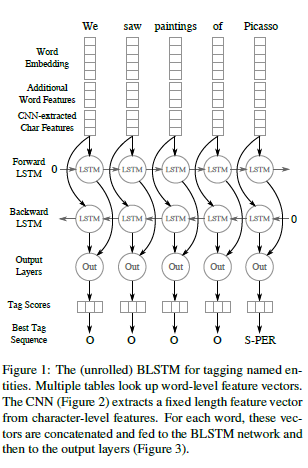

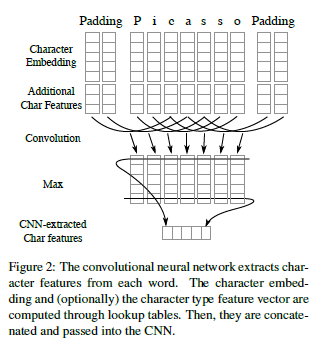

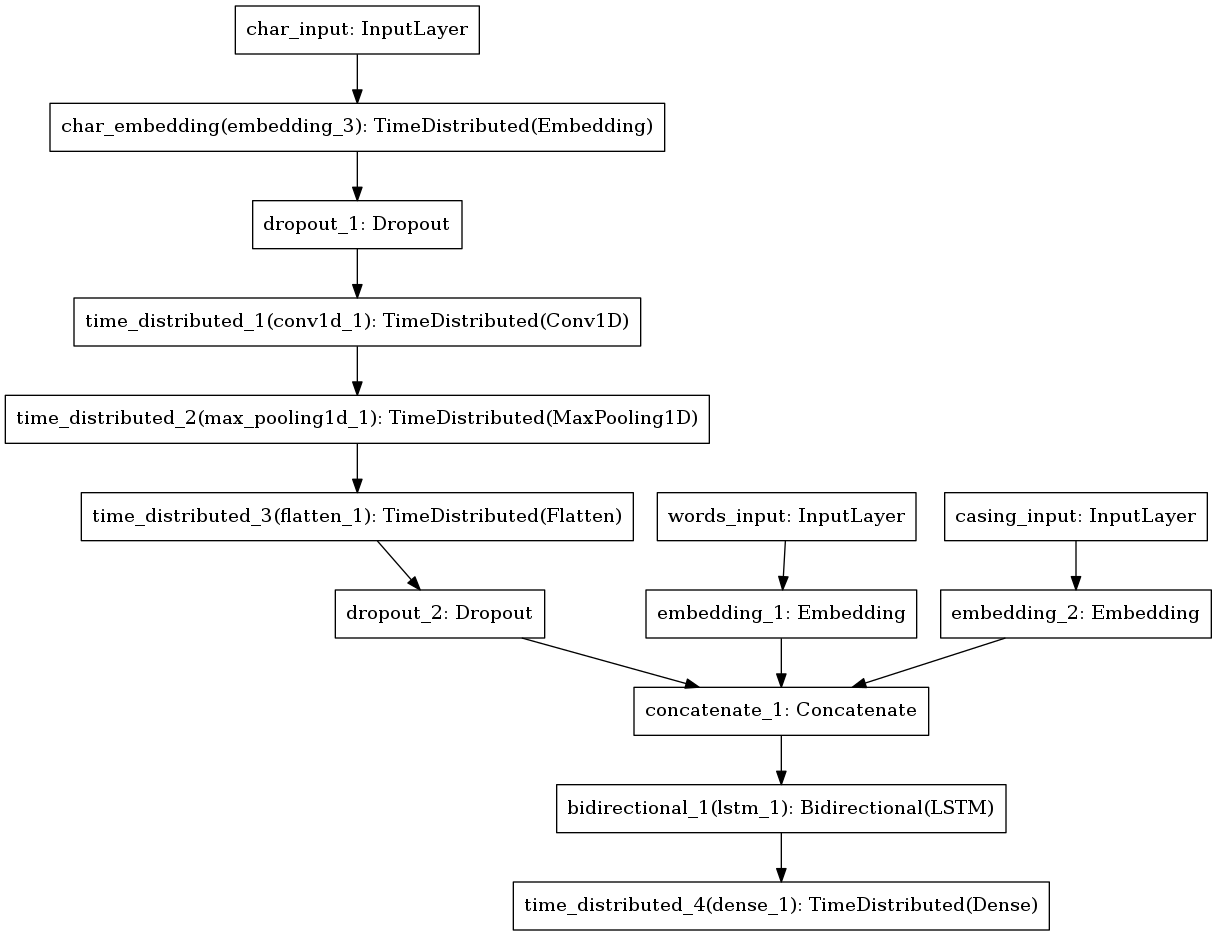

A Keras implementation of Bidirectional LSTM-CNNs for Named Entity Recognition. The original paper can be found at https://arxiv.org/abs/1511.08308.
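In the BiLSTM-CNN architecture described in the paper, the characters of each word are embedded, passed through a 1-D convolution, and max-pooled over time. This produces a fixed-size character feature vector, which is concatenated with the word embedding before the bidirectional LSTM. The pure-Python sketch below illustrates only that character-CNN step, without bias or nonlinearity for brevity. The function name `char_cnn_features`, the toy embedding table, and the filter weights are hypothetical and are not taken from this repository. In Keras this step is usually assembled from layers such as `Conv1D` plus a max-pooling layer.

```python
from typing import Dict, List

Vector = List[float]


def char_cnn_features(
    word: str,
    char_emb: Dict[str, Vector],
    filters: List[List[Vector]],
    pad: str = "<pad>",
) -> Vector:
    """Return one max-pooled activation per convolution filter.

    Each filter holds `width` weight vectors (one per window position), each
    the same size as a character embedding. All filters share one width.
    """
    width = len(filters[0])
    half = width // 2
    # Pad both sides so the first and last characters get full windows.
    chars = [pad] * half + list(word) + [pad] * half
    # Toy simplification: unknown characters reuse the padding vector.
    emb = [char_emb.get(c, char_emb[pad]) for c in chars]
    features: Vector = []
    for f in filters:
        best = float("-inf")
        # 1-D convolution: slide the filter over the character sequence.
        for start in range(len(emb) - width + 1):
            score = sum(
                w * x
                for pos in range(width)
                for w, x in zip(f[pos], emb[start + pos])
            )
            # Max pooling over time keeps the strongest response.
            best = max(best, score)
        features.append(best)
    return features


# Toy 2-d character embeddings and two width-3 filters (hypothetical values).
char_emb = {"<pad>": [0.0, 0.0], "a": [1.0, 0.0], "b": [0.0, 1.0]}
filters = [
    [[1.0, 0.0]] * 3,  # responds to runs of "a"
    [[0.0, 1.0]] * 3,  # responds to runs of "b"
]
print(char_cnn_features("aab", char_emb, filters))  # [2.0, 1.0]
```

Max pooling is what makes the output length equal to the number of filters, regardless of how long the word is. This is why the character features can be concatenated with a fixed-size word embedding.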

The implementation differs from the original paper in the following ways:

- Lexicons are not used

- Bucketing is used to speed up training

- The Nadam optimizer is used instead of SGD
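Bucketing puts sentences of the same length into the same batch. Each batch then needs no padding and can be fed to the recurrent layers as one dense array. The sketch below assumes exact-length buckets and sentences given as lists of tokens. The function name `bucket_by_length` and the `batch_size` parameter are illustrative and are not this repository's code.

```python
from collections import defaultdict
from typing import Dict, List

Sentence = List[str]


def bucket_by_length(sentences: List[Sentence], batch_size: int) -> List[List[Sentence]]:
    """Group sentences by exact token length, then split each group into batches."""
    buckets: Dict[int, List[Sentence]] = defaultdict(list)
    for sent in sentences:
        buckets[len(sent)].append(sent)
    batches: List[List[Sentence]] = []
    for length in sorted(buckets):
        group = buckets[length]
        # All sentences in one batch share a length, so no padding is needed.
        for i in range(0, len(group), batch_size):
            batches.append(group[i:i + batch_size])
    return batches


sentences = [
    ["Steve", "went", "to", "Paris"],
    ["Hello"],
    ["Angela", "Merkel"],
    ["The", "EU", "met", "today"],
    ["New", "York"],
]
for batch in bucket_by_length(sentences, batch_size=2):
    print([len(s) for s in batch])
# [1]
# [2, 2]
# [4, 4]
```

Exact-length buckets can leave some batches very small. A common alternative is to bucket by length ranges and pad only within each range. Shuffling the batch order every epoch is also typical during training.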

The model achieves a test F1 score of 90.9% after ~70 epochs. The paper reports an F1 score of 91.14 for the same architecture (BiLSTM-CNN with emb + caps).
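The "caps" in "emb + caps" refers to a word-level capitalization feature that is used alongside the word embedding. The sketch below assumes five illustrative casing categories plus a padding index. The exact category set used by the paper or by this repository may differ, and `casing_index` and `CASING` are hypothetical names. The resulting index would typically select a row in a small lookup table, and that row is concatenated with the word embedding.

```python
from typing import Dict

# Illustrative casing categories (an assumption); index 0 is reserved for padding.
CASING: Dict[str, int] = {
    "padding": 0,
    "allUpper": 1,
    "initialUpper": 2,
    "allLower": 3,
    "mixedCaps": 4,
    "noinfo": 5,
}


def casing_index(word: str) -> int:
    """Map a token to the index of its capitalization category."""
    letters = [c for c in word if c.isalpha()]
    if not letters:
        return CASING["noinfo"]  # numbers, punctuation, etc.
    if all(c.isupper() for c in letters):
        return CASING["allUpper"]
    if all(c.islower() for c in letters):
        return CASING["allLower"]
    if letters[0].isupper() and all(c.islower() for c in letters[1:]):
        return CASING["initialUpper"]
    return CASING["mixedCaps"]


print([casing_index(w) for w in ["Steve", "went", "NASA", "iPhone", "1996"]])
# [2, 3, 1, 4, 5]
```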

To train the model, run:

    python3 nn.py

Requirements:

0) nltk

1) numpy

2) Keras==2.1.2

3) Tensorflow==1.4.1

To tag a sentence with the trained models:

    from ner import Parser

    p = Parser()
    p.load_models("models/")  # load the saved models from the models/ directory
    p.predict("Steve went to Paris")
    # Output: [('Steve', 'B-PER'), ('went', 'O'), ('to', 'O'), ('Paris', 'B-LOC')]
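The output above pairs each token with a BIO tag. `B-` begins an entity, `I-` continues it, and `O` marks tokens outside any entity. If you need whole entity phrases, a small post-processing step can merge the tagged tokens. The helper `group_entities` below is a hypothetical sketch and is not part of the `ner` module. It assumes only the list-of-`(token, tag)` format shown above.

```python
from typing import List, Optional, Tuple


def group_entities(tagged: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Merge BIO-tagged tokens into (entity_text, entity_type) pairs."""
    entities: List[Tuple[str, str]] = []
    tokens: List[str] = []
    current: Optional[str] = None  # type of the entity being built, if any
    for token, tag in tagged:
        prefix, _, etype = tag.partition("-")
        # Extend the open entity only on an I- tag of the same type.
        if prefix == "I" and etype == current:
            tokens.append(token)
            continue
        # Any other tag closes the open entity.
        if current is not None:
            entities.append((" ".join(tokens), current))
        if prefix in ("B", "I"):
            # B- starts a new entity; a stray I- is treated as a start too.
            current, tokens = etype, [token]
        else:
            current, tokens = None, []
    if current is not None:
        entities.append((" ".join(tokens), current))
    return entities


tagged = [("Steve", "B-PER"), ("went", "O"), ("to", "O"), ("Paris", "B-LOC")]
print(group_entities(tagged))  # [('Steve', 'PER'), ('Paris', 'LOC')]
```

Multi-token entities are joined with spaces. For example, `[("New", "B-LOC"), ("York", "I-LOC")]` becomes `[("New York", "LOC")]`.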