- Motivation

- Hand Detection

- Detection Output

- Tech Stack

- Functionalities

- To Do and Further Improvements

- Requirements

- Run Locally

- License

- Contributors

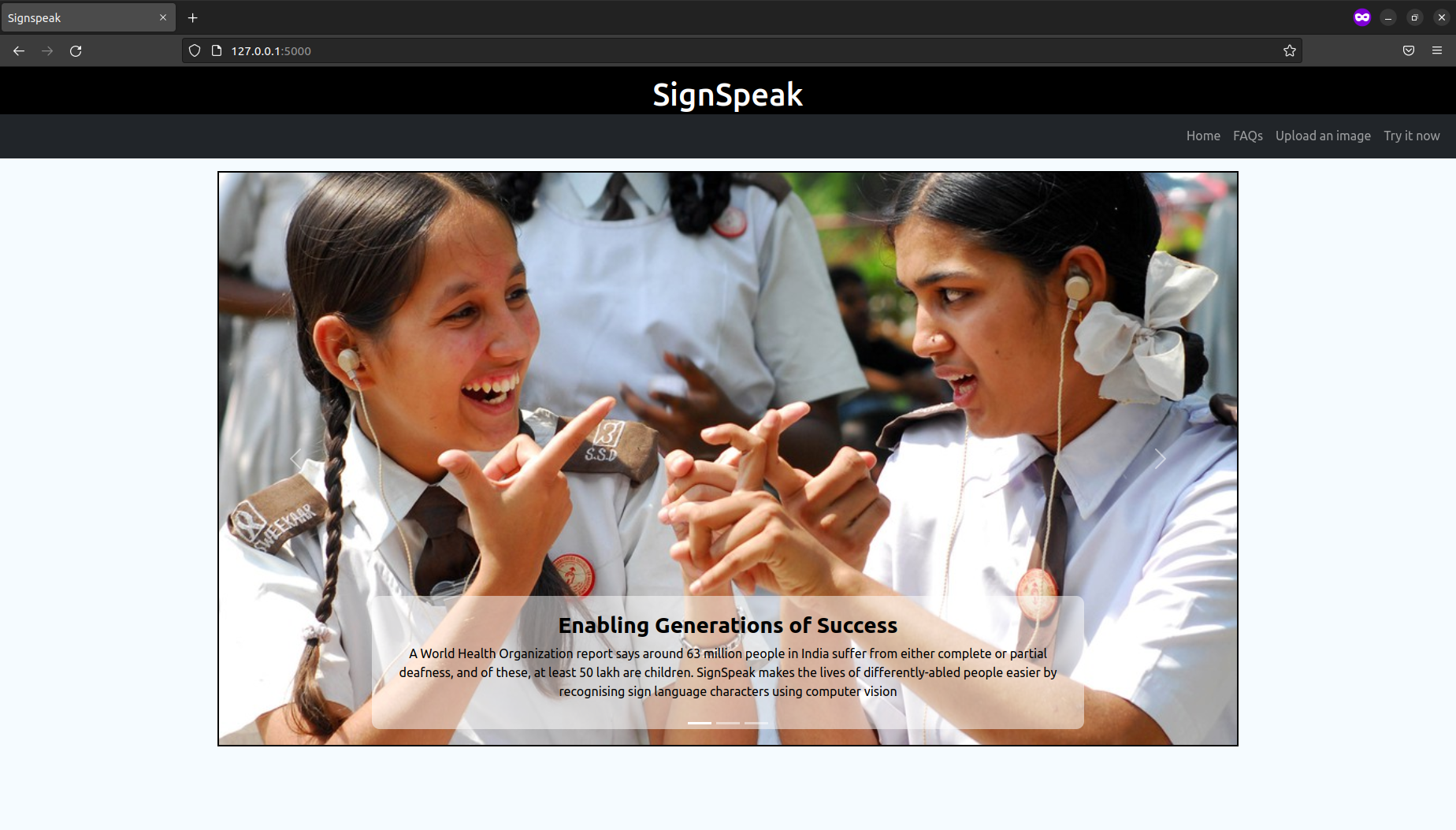

People with speech or hearing impairments often find it hard to communicate with other people or with recent conversational AI systems. They usually communicate through sign languages, which combine hand gestures and facial expressions. A computer interface that interprets hand gestures from a single camera view and converts them into a text or speech transcript would benefit both parties. We deal only with static signs for simplicity, but a similar approach could be extended to dynamic sign recognition. Such a system can support effective human-computer interaction for impaired users in settings such as customer support and virtual meetings.

The crux of this solution lies in identifying the presence of a hand in the video feed or image. Detection is done using OpenCV, cvzone and mediapipe.
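As a rough illustration of what happens after a hand is found, the sketch below turns hand landmarks into a pixel bounding box. It assumes landmarks arrive as normalized `(x, y)` pairs in the range 0 to 1, which is how MediaPipe Hands reports them. The function name `hand_bounding_box` and the `padding` parameter are hypothetical and not taken from this project's code.

```python
def hand_bounding_box(landmarks, frame_width, frame_height, padding=20):
    """Return (x, y, w, h) in pixels around the landmarks, or None if empty.

    landmarks: iterable of normalized (x, y) pairs in [0, 1] (assumed format).
    padding: extra pixels added on every side (hypothetical default).
    """
    landmarks = list(landmarks)
    if not landmarks:
        return None

    # Convert normalized coordinates to pixel coordinates.
    xs = [int(x * frame_width) for x, _ in landmarks]
    ys = [int(y * frame_height) for _, y in landmarks]

    # Expand by the padding, then clamp to the frame borders.
    x_min = max(min(xs) - padding, 0)
    y_min = max(min(ys) - padding, 0)
    x_max = min(max(xs) + padding, frame_width - 1)
    y_max = min(max(ys) + padding, frame_height - 1)

    return x_min, y_min, x_max - x_min, y_max - y_min


if __name__ == "__main__":
    # Two illustrative landmarks on a 640x480 frame.
    print(hand_bounding_box([(0.25, 0.5), (0.5, 0.75)], 640, 480, padding=10))
```

The resulting tuple can be passed to a drawing routine such as OpenCV's `cv2.rectangle` (using the top-left and bottom-right corners) to mark the hand on the frame.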

- HTML, CSS and Bootstrap have been used for the front-end

- Flask has been used as a back-end framework

- The application has been developed using Python

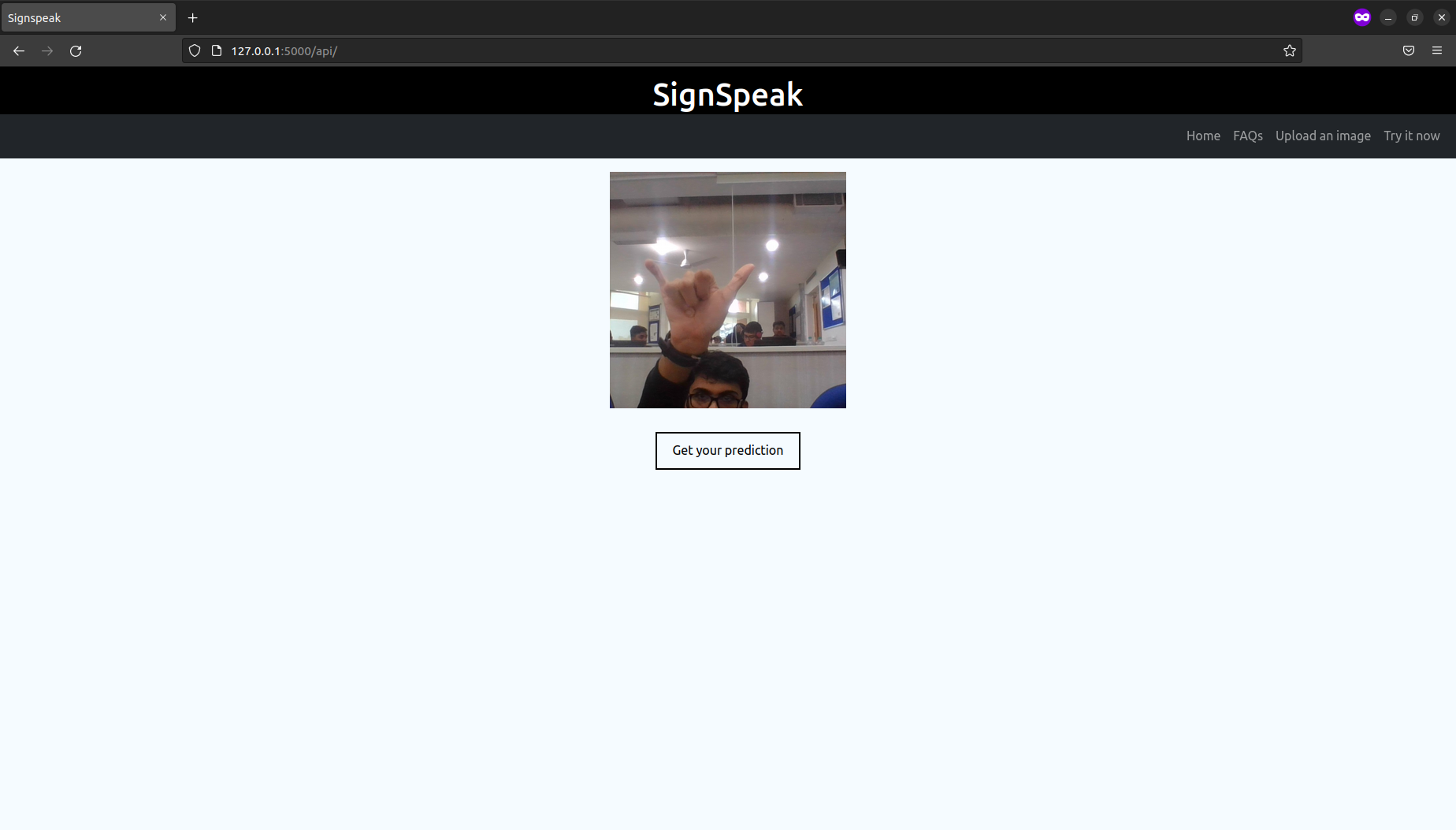

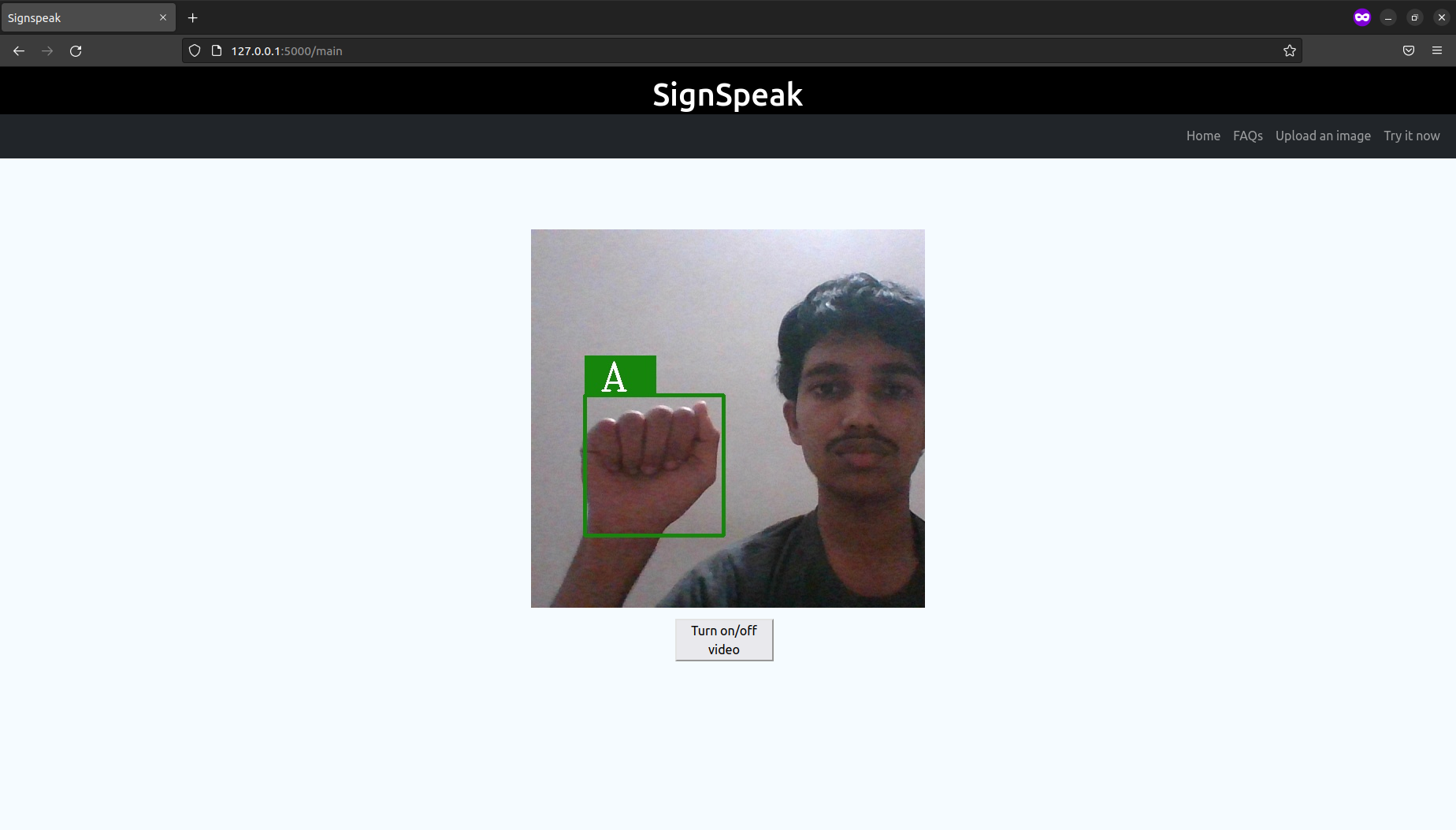

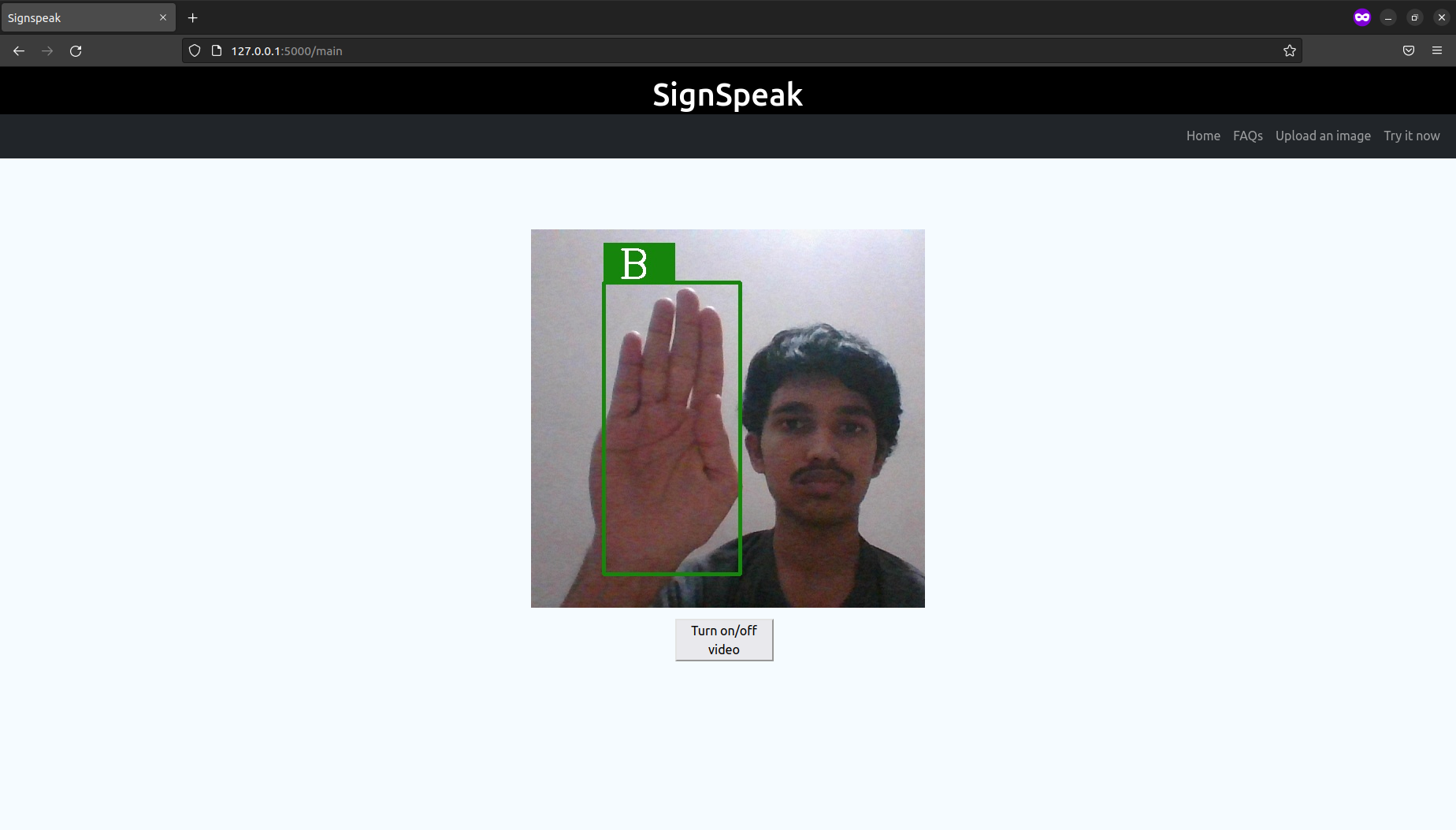

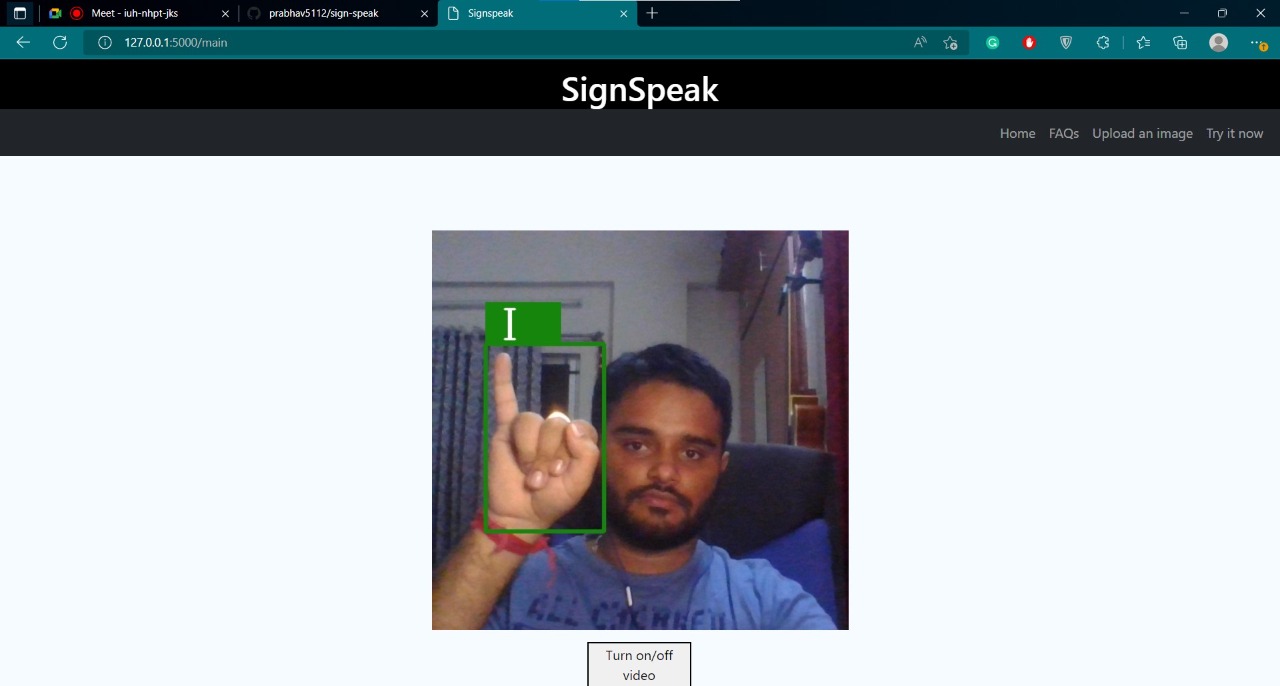

- Detect a hand shown in the video feed/image.

- Draw a box around the hand which has been detected.

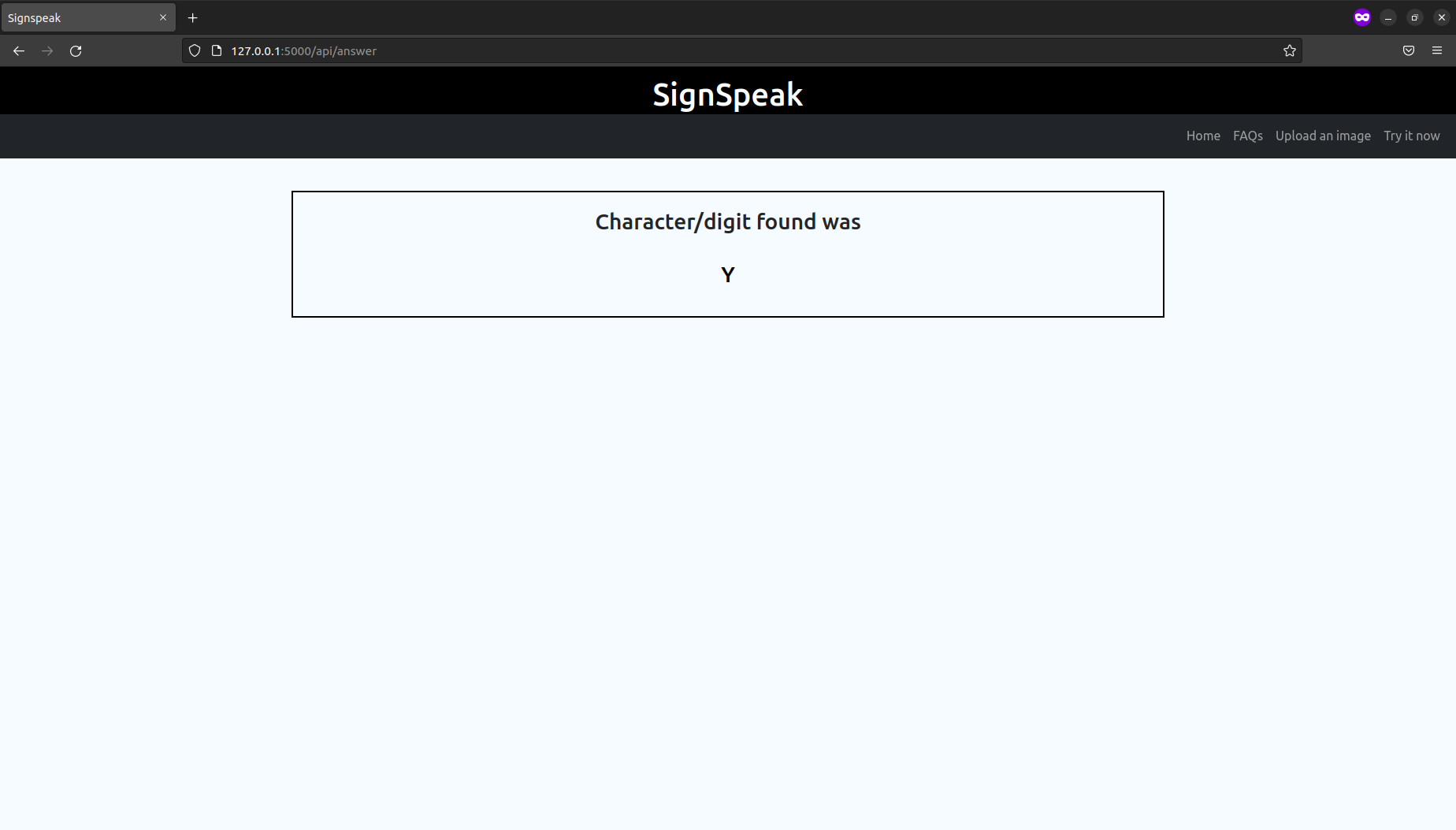

- Display the character/digit recognised.

- Using OpenCV, cvzone and mediapipe for hand detection

- Develop an algorithm which can automatically classify sign language from a video taken from a webcam and convert it to text/speech transcript.

- Display the character/digit shown

- Detect and draw a box around a hand (if present) in an image, video or live stream.

- Adding a button to turn on/off video feed

- Updating the simple and minimalistic UI
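The "display the character/digit" item above implies mapping a classifier's output to a readable label. The sketch below shows one way this could be done. The label set (digits 0-9 followed by letters A-Z), the function name `decode_prediction` and the `min_confidence` threshold are all assumptions for illustration; the project's actual model and class order may differ.

```python
import string

# Hypothetical class order: digits 0-9 followed by letters A-Z.
LABELS = list(string.digits + string.ascii_uppercase)


def decode_prediction(scores, labels=LABELS, min_confidence=0.6):
    """Return the label with the highest score, or None if it is too uncertain.

    scores: one probability per class, in the same order as `labels`.
    min_confidence: hypothetical cut-off below which nothing is displayed.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")

    # Index of the highest-scoring class.
    best = max(range(len(scores)), key=lambda i: scores[i])
    if scores[best] < min_confidence:
        return None
    return labels[best]


if __name__ == "__main__":
    example = [0.0] * len(LABELS)
    example[10] = 0.9  # index 10 is "A" under the assumed class order
    print(decode_prediction(example))
```

Returning `None` for low-confidence frames lets the UI skip the overlay instead of flashing an unreliable character.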

The following Python dependencies are required to run the project locally:

- opencv-python==4.6.0.66

- flask==2.2.2

- wtforms==3.0.0

- flask_wtf==1.0.1

- tensorflow==2.9.2

- mediapipe

- cvzone
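To confirm that an environment matches the list above, a small check like the following may help. It is a sketch using only the standard library's `importlib.metadata`; the `PINNED` mapping simply restates the versions listed here, and `check_dependencies` is a hypothetical helper, not part of this repository.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions copied from the list above; None means no version is pinned.
PINNED = {
    "opencv-python": "4.6.0.66",
    "flask": "2.2.2",
    "wtforms": "3.0.0",
    "flask_wtf": "1.0.1",
    "tensorflow": "2.9.2",
    "mediapipe": None,
    "cvzone": None,
}


def check_dependencies(pinned=PINNED):
    """Return a dict mapping each package name to a short status string."""
    report = {}
    for name, wanted in pinned.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            report[name] = "missing"
            continue
        if wanted is None or installed == wanted:
            report[name] = "ok"
        else:
            report[name] = f"installed {installed}, expected {wanted}"
    return report


if __name__ == "__main__":
    for package, status in check_dependencies().items():
        print(f"{package}: {status}")
```

Running the script inside the activated virtual environment prints one status line per package.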

- Clone the GitHub repository

$ git clone git@github.com:prabhav5112/sign-speak.git

- Move to the Project Directory

$ cd sign-speak

- Create a Virtual Environment (Optional)

- Install Virtualenv using pip (If it is not installed)

$ pip install virtualenv

- Create the Virtual Environment

$ virtualenv sgnspk

- Activate the Virtual Environment

- On macOS/Linux

$ source sgnspk/bin/activate

- On Windows

$ sgnspk\Scripts\activate

- Install the requirements

(sgnspk) $ pip install -r requirements.txt

- Run the Python script main.py

(sgnspk) $ python3 main.py

- Deactivate the Virtual Environment (after you are done)

(sgnspk) $ deactivate

This project is licensed under the Apache-2.0 License. See LICENSE for details.

- Prabhav B Kashyap

- Sridhar D Kedlaya

- Imon Banerjee