| title | emoji | colorFrom | colorTo | sdk | sdk_version | app_file | pinned | license |
|---|---|---|---|---|---|---|---|---|
| KTUGPT | 📚 | blue | purple | gradio | 4.28.3 | app.py | false | mit |

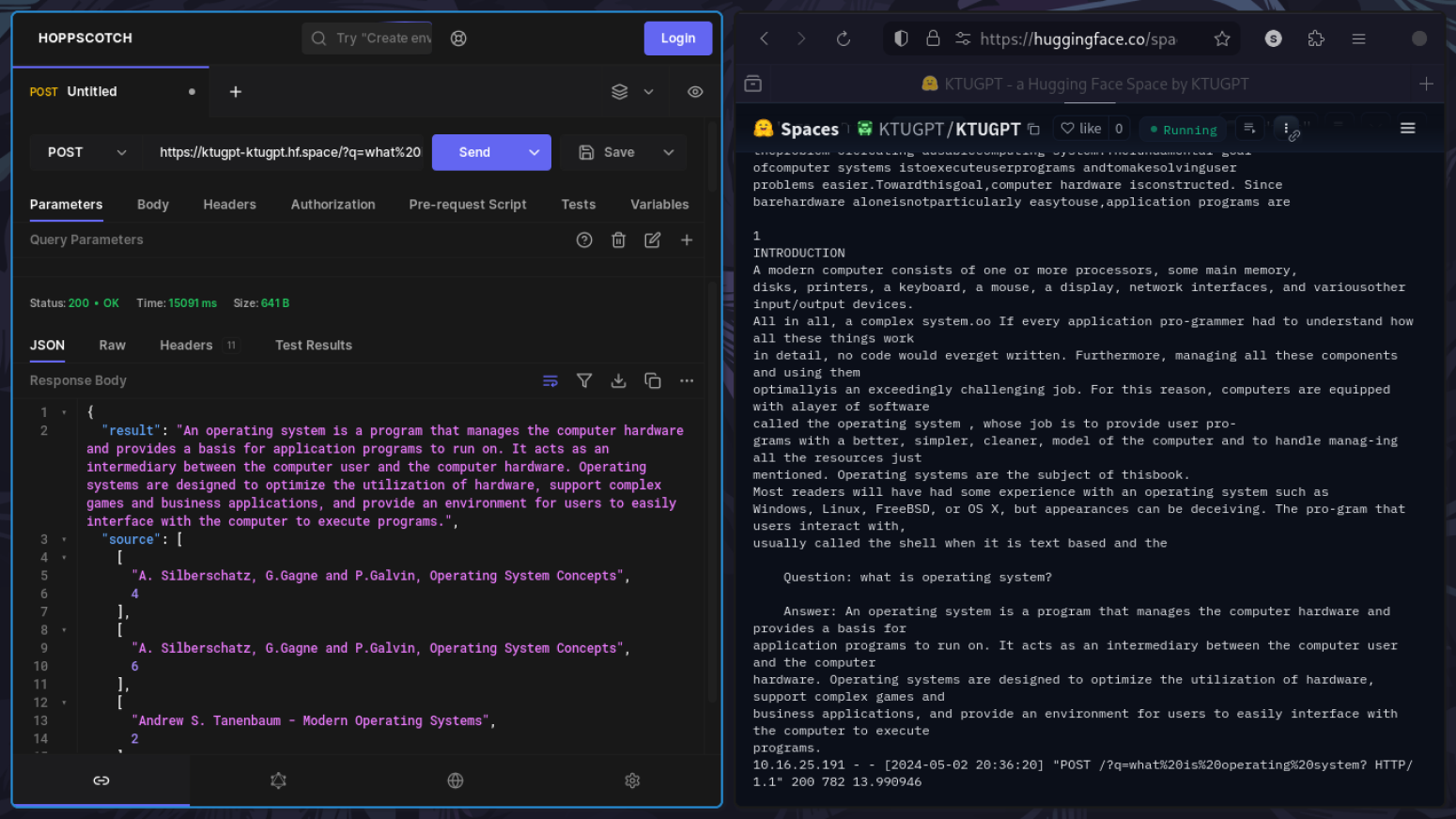

A Flask web application that answers questions using context retrieved from PDF documents. It uses `mistralai/Mistral-7B-Instruct-v0.1` as the large language model (LLM) and `hkunlp/instructor-xl` to compute text embeddings.
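The retrieve-then-answer flow described above can be illustrated with a toy, stdlib-only sketch. It is not the app's code: `embed` is a bag-of-words stand-in for `hkunlp/instructor-xl`, the chunk list stands in for text extracted from the PDFs, and the prompt wording inside Mistral-Instruct's `[INST] ... [/INST]` tags is a hypothetical template.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for instructor-xl: a bag-of-words count vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question (ties keep input order)."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Wrap the retrieved context and the question in [INST] tags (hypothetical wording)."""
    joined = "\n\n".join(context)
    return (
        "[INST] Answer the question using only the context below.\n\n"
        f"{joined}\n\nQuestion: {question} [/INST]"
    )
```

Swapping `embed` for a real embedding model changes only the vectors; the rank-then-prompt structure stays the same.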

- Clone this repository: `git clone https://github.com/sameemul-haque/KTUGPT-Python.git`

- After cloning the repository, navigate into the `KTUGPT-Python` directory: `cd KTUGPT-Python`

- Set up a Python virtual environment: `python -m venv venv`

- Activate the virtual environment:
  - GNU/Linux and macOS: `source venv/bin/activate`
  - Windows: `venv\Scripts\activate`

- Install dependencies: `pip install -r requirements.txt`

- Create a `.env` file based on `.env.example`, and add your Hugging Face API token and MongoDB connection string to it.

- Run the app: `python app.py`
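Before starting the app, the `.env` file from the setup steps above can be sanity-checked. This is a minimal stdlib sketch that parses simple `KEY=VALUE` lines; the key names `HUGGINGFACEHUB_API_TOKEN` and `MONGODB_URI` are hypothetical placeholders, so substitute the names actually listed in `.env.example`.

```python
# Hypothetical key names: replace with the ones used in .env.example.
REQUIRED_KEYS = ("HUGGINGFACEHUB_API_TOKEN", "MONGODB_URI")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks, # comments, and lines without '='."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes, e.g. KEY="value".
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(text: str, required: tuple[str, ...] = REQUIRED_KEYS) -> list[str]:
    """Return the required keys that are absent or empty."""
    values = parse_env(text)
    return [key for key in required if not values.get(key)]
```

For example, `missing_keys(pathlib.Path(".env").read_text())` returns an empty list when every required key has a value.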

Once the Flask app is running, send a POST request to `http://127.0.0.1:5000/` with a query parameter `q` containing your question. The app returns an answer generated by the configured language model and retrieval method. For example: `http://127.0.0.1:5000/?q=what%20is%20operating%20system?`
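A minimal client sketch using only the Python standard library, assuming the endpoint accepts a POST to `/` with the question in the `q` query parameter (as described above) and returns the answer as plain text. The names `build_query_url` and `ask` and the 120-second timeout are illustrative choices, not part of the app.

```python
import urllib.parse
import urllib.request

BASE_URL = "http://127.0.0.1:5000/"  # default local address from this README


def build_query_url(question: str, base_url: str = BASE_URL) -> str:
    """Percent-encode the question into the `q` query parameter (spaces become %20)."""
    query = urllib.parse.urlencode({"q": question}, quote_via=urllib.parse.quote)
    return f"{base_url}?{query}"


def ask(question: str, timeout: float = 120.0) -> str:
    """POST to the app and return the response body as text.

    Assumes a plain-text answer; if the app returns JSON, decode it with json.loads.
    The generous timeout is an arbitrary allowance for slow LLM generation.
    """
    request = urllib.request.Request(build_query_url(question), data=b"", method="POST")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)


# Usage, with the app running locally:
#   print(ask("what is operating system?"))
```

Encoding with `urllib.parse.quote` produces `%20` for spaces, matching the example URL; the default `quote_plus` would use `+`, which Flask also decodes as a space.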