The Agency Swarm SDK is a framework for building robust multi-agent workflows, based on a fork of the lightweight OpenAI Agents SDK. It extends the base SDK with features for defining, orchestrating, and managing persistent conversations between multiple agents.

It is provider-agnostic, supporting the OpenAI Responses and Chat Completions APIs, as well as 100+ other LLMs via the underlying SDK capabilities.

- Agents: LLMs configured with instructions, tools, and guardrails. In Agency Swarm, `agency_swarm.Agent` extends the base agent, managing sub-agent communication links and file handling.
- Agency: A builder class that defines the overall structure of collaborating agents using an `agency_chart`. It registers agents, sets up communication paths (via the `send_message` tool), manages shared state (`ThreadManager`), and configures persistence.
- `agency_chart`: A list defining the agents and allowed communication flows within an `Agency`.
- ThreadManager & Persistence: Manages conversation state (`ConversationThread`) for each agent interaction. Persistence is handled via `load_callback` and `save_callback` functions provided to the `Agency`.
- `send_message` Tool: A specialized `FunctionTool` automatically added to agents, enabling them to invoke other agents they are connected to in the `agency_chart`.
- Tools: Standard SDK tools (like `FunctionTool`) used by agents to perform actions.
- Guardrails: Configurable safety checks for input and output validation (inherited from the SDK).

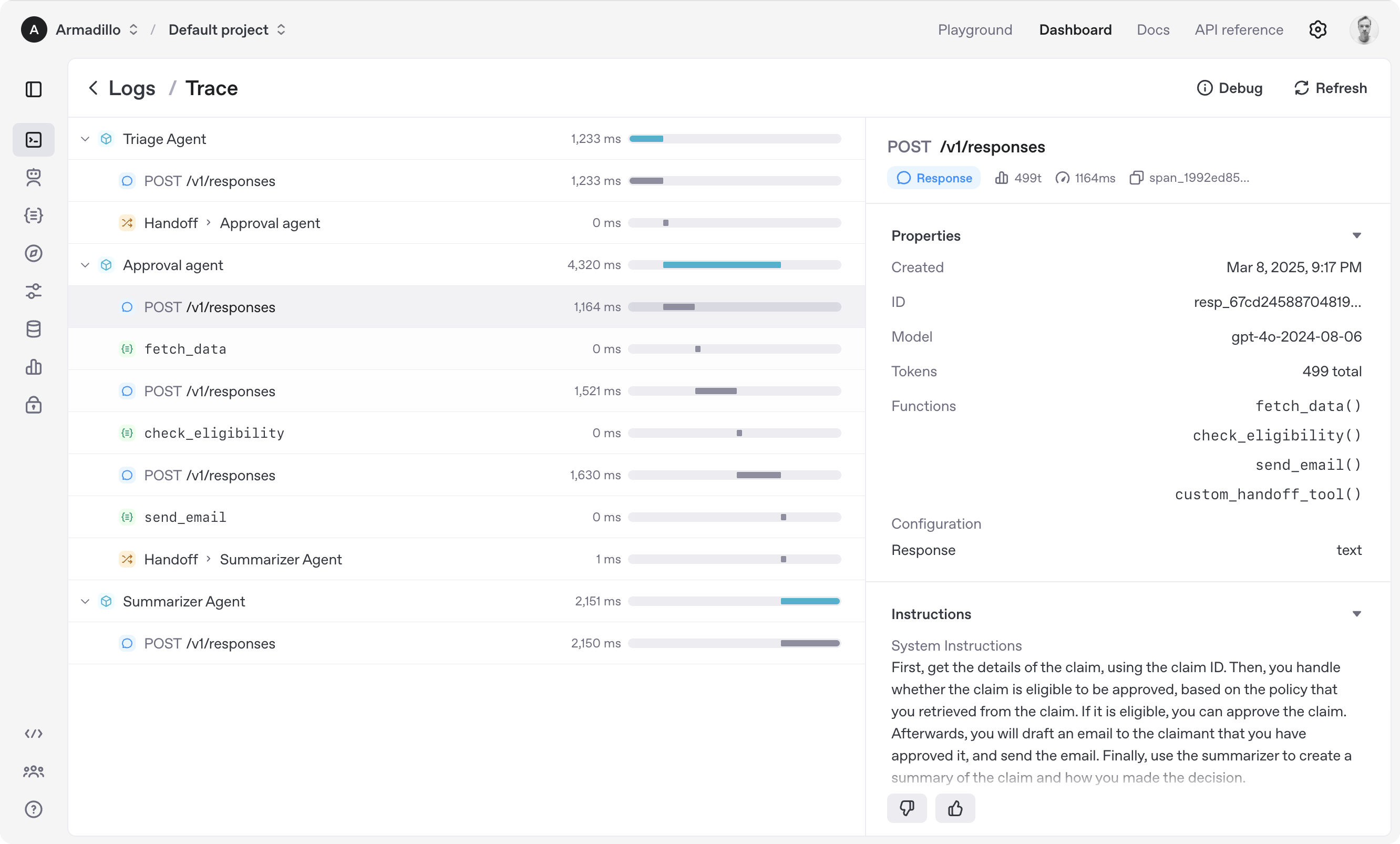

- Tracing: Built-in tracing of agent runs via the SDK, for viewing and debugging them.

Explore the examples directory to see the SDK in action, and read our documentation (including the Migration Guide) for more details.

- Set up your Python environment

```bash
python -m venv env
source env/bin/activate
```

- Install Agency Swarm SDK

```bash
# TODO: Update with actual package name
pip install agency-swarm-sdk
```

```python
# Demonstrates basic SDK Agent usage
from agents import Agent, Runner  # Use base SDK components

agent = Agent(name="Assistant", instructions="You are a helpful assistant")

result = Runner.run_sync(agent, "Write a haiku about recursion in programming.")
print(result.final_output)

# Code within the code,
# Functions calling themselves,
# Infinite loop's dance.
```

(If running this, ensure you set the `OPENAI_API_KEY` environment variable.)

```python
import asyncio
import uuid
from typing import Optional

from agency_swarm import Agent, Agency
from agency_swarm.thread import ThreadManager, ConversationThread  # Import thread components

# Define two agents
agent_one = Agent(
    name="AgentOne",
    instructions="You are agent one. If asked about the weather, send a message to AgentTwo.",
    # tools=[...]  # send_message is added automatically by Agency if needed
)

agent_two = Agent(
    name="AgentTwo",
    instructions="You are agent two. You report the weather is always sunny.",
)

# Define the agency structure and communication
# AgentOne can talk to AgentTwo
agency_chart = [
    agent_one,
    agent_two,
    [agent_one, agent_two],  # Defines communication flow: agent_one -> agent_two
]

# --- Simple In-Memory Persistence Example ---
# (Replace with file/database callbacks for real use)
memory_threads: dict[str, ConversationThread] = {}


def memory_save_callback(thread: ConversationThread):
    print(f"[Persistence] Saving thread: {thread.thread_id}")
    memory_threads[thread.thread_id] = thread


def memory_load_callback(thread_id: str) -> Optional[ConversationThread]:
    print(f"[Persistence] Loading thread: {thread_id}")
    return memory_threads.get(thread_id)

# -------------------------------------------

# Create the agency
agency = Agency(
    agency_chart=agency_chart,
    load_callback=memory_load_callback,
    save_callback=memory_save_callback,
)


async def main():
    chat_id = f"chat_{uuid.uuid4()}"

    print(f"--- Turn 1: Asking AgentOne about weather (ChatID: {chat_id}) ---")
    result1 = await agency.get_response(
        message="What is the weather like?",
        recipient_agent="AgentOne",  # Start interaction with AgentOne
        chat_id=chat_id,
    )
    print(f"\nFinal Output from AgentOne: {result1.final_output}")
    # Expected: AgentOne calls send_message -> AgentTwo responds -> AgentOne returns AgentTwo's response
    # Output should be similar to: The weather is always sunny.

    print(f"\n--- Turn 2: Follow up (ChatID: {chat_id}) ---")
    # Use the *same* chat_id to continue the conversation
    result2 = await agency.get_response(
        message="Thanks!",
        recipient_agent="AgentOne",  # Continue with AgentOne
        chat_id=chat_id,
    )
    print(f"\nFinal Output from AgentOne: {result2.final_output}")

    print(f"\n--- Checking Persisted History for {chat_id} ---")
    final_thread = memory_load_callback(chat_id)
    if final_thread:
        print(f"Thread {final_thread.thread_id} items:")
        for item in final_thread.items:
            print(f"- Role: {item.get('role')}, Content: {item.get('content')}")
    else:
        print("Thread not found in memory.")


if __name__ == "__main__":
    asyncio.run(main())
```

```python
import asyncio

from agents import Agent, Runner, function_tool


@function_tool
def get_weather(city: str) -> str:
    # Stub tool: always reports sunny weather for the requested city
    return f"The weather in {city} is sunny."


agent = Agent(
    name="Hello world",
    instructions="You are a helpful agent.",
    tools=[get_weather],
)


async def main():
    result = await Runner.run(agent, input="What's the weather in Tokyo?")
    print(result.final_output)
    # The weather in Tokyo is sunny.


if __name__ == "__main__":
    asyncio.run(main())
```

When you call `Runner.run()`, we run a loop until we get a final output.

1. We call the LLM, using the model and settings on the agent and the message history.
2. The LLM returns a response, which may include tool calls.
3. If the response has a final output (see below for more on this), we return it and end the loop.
4. If the response has a handoff, we set the agent to the new agent and go back to step 1.
5. We process the tool calls (if any) and append the tool response messages. Then we go to step 1.

There is a max_turns parameter that you can use to limit the number of times the loop executes.
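To make the loop concrete, here is a framework-free Python sketch of the plain-text case (no `output_type`). It is a conceptual model, not the SDK's implementation: `ModelResponse`, `SketchAgent`, `run_loop`, `MaxTurnsExceeded`, and the scripted `weather_llm` are hypothetical stand-ins for the real model call, tool execution, and handoff machinery, and `max_turns` here only mimics the SDK parameter of the same name.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ModelResponse:
    """Hypothetical stand-in for a single LLM response."""
    text: Optional[str] = None
    tool_calls: list[tuple[str, str]] = field(default_factory=list)  # (tool_name, argument) pairs
    handoff: Optional[str] = None  # name of the agent to hand off to, if any


@dataclass
class SketchAgent:
    """Hypothetical agent: a fake LLM function plus named tools."""
    name: str
    llm: Callable[[list[str]], ModelResponse]  # message history -> response
    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)


class MaxTurnsExceeded(Exception):
    """Raised when the loop hits max_turns without a final output."""


def run_loop(agent: SketchAgent, agents: dict[str, SketchAgent],
             user_input: str, max_turns: int = 10) -> str:
    history = [f"user: {user_input}"]
    for _ in range(max_turns):
        # Steps 1-2: call the (fake) LLM with the message history.
        response = agent.llm(history)
        # Step 3: no tool calls and no handoff means this is the final output.
        if not response.tool_calls and response.handoff is None:
            return response.text or ""
        # Step 4: on a handoff, switch the current agent and start the next turn.
        if response.handoff is not None:
            agent = agents[response.handoff]
            continue
        # Step 5: run each tool call and append its result to the history.
        for tool_name, argument in response.tool_calls:
            history.append(f"tool {tool_name}: {agent.tools[tool_name](argument)}")
    raise MaxTurnsExceeded(f"no final output after {max_turns} turns")


def weather_llm(history: list[str]) -> ModelResponse:
    # Scripted fake LLM: request the tool first, then answer with its result.
    if not any(item.startswith("tool ") for item in history):
        return ModelResponse(tool_calls=[("get_weather", "Tokyo")])
    return ModelResponse(text=history[-1].split(": ", 1)[1])


weather_agent = SketchAgent(
    name="Hello world",
    llm=weather_llm,
    tools={"get_weather": lambda city: f"The weather in {city} is sunny."},
)

print(run_loop(weather_agent, {weather_agent.name: weather_agent}, "What's the weather in Tokyo?"))
# The weather in Tokyo is sunny.
```

Because a handoff only swaps the current agent and keeps the history, the next turn continues the same conversation with the new agent, which is what step 4 describes.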

Final output is the last thing the agent produces in the loop.

- If you set an `output_type` on the agent, the final output is the first LLM response of that type. We use structured outputs for this.
- If there's no `output_type` (i.e. plain text responses), then the first LLM response without any tool calls or handoffs is considered the final output.

As a result, the mental model for the agent loop is:

- If the current agent has an `output_type`, the loop runs until the agent produces structured output matching that type.
- If the current agent does not have an `output_type`, the loop runs until the current agent produces a message without any tool calls/handoffs.
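The final-output rule can be sketched as a single decision function. This is an illustration under stated assumptions, not SDK code: `Response`, `WeatherReport`, and `final_output` are hypothetical, and a dataclass parsed from JSON stands in for the structured outputs the SDK actually uses when `output_type` is set. Returning `None` means "run another turn".

```python
import json
from dataclasses import dataclass, fields
from typing import Any, Optional


@dataclass
class Response:
    """Hypothetical stand-in for one LLM response."""
    text: str = ""
    has_tool_calls: bool = False
    has_handoff: bool = False


@dataclass
class WeatherReport:
    """Hypothetical output_type for the example."""
    city: str
    conditions: str


def final_output(response: Response, output_type: Optional[type] = None) -> Optional[Any]:
    """Return the final output, or None if the loop should continue."""
    if response.has_tool_calls or response.has_handoff:
        return None  # tool calls or handoffs always mean another turn
    if output_type is None:
        return response.text  # plain text: first message without tool calls/handoffs
    try:
        data = json.loads(response.text)
    except json.JSONDecodeError:
        return None  # not structured output, so keep looping
    # Accept only a JSON object whose keys exactly match the dataclass fields.
    if isinstance(data, dict) and set(data) == {f.name for f in fields(output_type)}:
        return output_type(**data)
    return None


print(final_output(Response(text="It is sunny in Tokyo.")))
# It is sunny in Tokyo.
print(final_output(Response(text='{"city": "Tokyo", "conditions": "sunny"}'), WeatherReport))
# WeatherReport(city='Tokyo', conditions='sunny')
```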

Agency Swarm uses the Agency class to define and manage groups of collaborating agents. The structure and communication paths are defined in the agency_chart.

- Agent Registration: Agents are automatically registered, based on the chart, when the `Agency` is initialized.
- Communication: Agents communicate using the built-in `send_message` tool, which is automatically added to agents that have permission to talk to others (defined by `[SenderAgent, ReceiverAgent]` pairs in the chart).
- Entry Points: Interactions are typically started by calling `agency.get_response()` or `agency.get_response_stream()`, targeting a specific agent designated as an entry point (though agents that are not entry points can also be called directly).
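The chart rules can be illustrated with a small, framework-free sketch: standalone entries register agents, and each `[SenderAgent, ReceiverAgent]` pair grants a one-way communication path, so only senders would need a `send_message` tool. This is not Agency Swarm's parsing code; agents are plain name strings here, and `parse_chart`, `can_send`, and `agents_with_send_message` are hypothetical helpers.

```python
from typing import Union

ChartEntry = Union[str, list[str]]  # hypothetical: agent names instead of Agent objects


def parse_chart(chart: list[ChartEntry]) -> tuple[list[str], dict[str, set[str]]]:
    """Split a chart into registered agents and allowed sender -> receiver links."""
    agents: list[str] = []
    links: dict[str, set[str]] = {}

    def register(name: str) -> None:
        if name not in agents:
            agents.append(name)

    for entry in chart:
        if isinstance(entry, str):
            register(entry)  # standalone agent
        else:
            sender, receiver = entry  # [SenderAgent, ReceiverAgent] pair
            register(sender)
            register(receiver)
            links.setdefault(sender, set()).add(receiver)  # one-way permission
    return agents, links


def can_send(links: dict[str, set[str]], sender: str, receiver: str) -> bool:
    return receiver in links.get(sender, set())


def agents_with_send_message(agents: list[str], links: dict[str, set[str]]) -> list[str]:
    # Only agents that may message someone would need the send_message tool.
    return [name for name in agents if links.get(name)]


# Mirrors the chart from the quick-start example.
agents, links = parse_chart(["AgentOne", "AgentTwo", ["AgentOne", "AgentTwo"]])
print(can_send(links, "AgentOne", "AgentTwo"))  # True
print(can_send(links, "AgentTwo", "AgentOne"))  # False
```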

Conversation state is managed per interaction context (identified by chat_id) using ConversationThread objects held by a central ThreadManager.

- `ConversationThread`: Stores the sequence of messages and tool interactions for a specific chat.
- `ThreadManager`: Creates, retrieves, and manages `ConversationThread` instances.
- Persistence Callbacks: The `Agency` accepts optional `load_callback(thread_id)` and `save_callback(thread)` functions during initialization. These functions are responsible for loading and saving `ConversationThread` state to your desired backend (e.g., files, database). If provided, they are automatically invoked at the start and end of agent runs via `PersistenceHooks`.
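As a step beyond the in-memory callbacks in the quick-start example, the sketch below keeps one JSON file per thread. It assumes a thread exposes a `thread_id` and a list of dict `items`, as that example's history printout suggests; `SketchThread`, `make_file_callbacks`, and the file layout are hypothetical stand-ins rather than Agency Swarm's `ConversationThread` API, so a real version would serialize whatever state `ConversationThread` actually holds.

```python
import json
import tempfile
from dataclasses import dataclass, field
from pathlib import Path
from typing import Callable, Optional


@dataclass
class SketchThread:
    """Hypothetical stand-in for ConversationThread (thread_id + dict items)."""
    thread_id: str
    items: list[dict] = field(default_factory=list)


def make_file_callbacks(
    directory: Path,
) -> tuple[Callable[[str], Optional[SketchThread]], Callable[[SketchThread], None]]:
    """Build load/save callbacks that store one JSON file per thread.

    Assumes thread_id is filename-safe (e.g. "chat_<uuid>").
    """
    directory.mkdir(parents=True, exist_ok=True)

    def load_callback(thread_id: str) -> Optional[SketchThread]:
        path = directory / f"{thread_id}.json"
        if not path.exists():
            return None  # unknown thread: the caller starts a fresh one
        data = json.loads(path.read_text(encoding="utf-8"))
        return SketchThread(thread_id=data["thread_id"], items=data["items"])

    def save_callback(thread: SketchThread) -> None:
        path = directory / f"{thread.thread_id}.json"
        payload = {"thread_id": thread.thread_id, "items": thread.items}
        path.write_text(json.dumps(payload), encoding="utf-8")

    return load_callback, save_callback


with tempfile.TemporaryDirectory() as tmp:
    load_callback, save_callback = make_file_callbacks(Path(tmp))
    # Start of a run: load the thread, or create a fresh one if none is stored.
    thread = load_callback("chat_demo") or SketchThread(thread_id="chat_demo")
    thread.items.append({"role": "user", "content": "What is the weather like?"})
    # End of a run: persist the updated thread.
    save_callback(thread)
    print(load_callback("chat_demo").items)
    # [{'role': 'user', 'content': 'What is the weather like?'}]
```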

The Agents SDK is designed to be highly flexible, allowing you to model a wide range of LLM workflows including deterministic flows, iterative loops, and more. See examples in examples/agent_patterns.

The Agents SDK automatically traces your agent runs, making it easy to track and debug the behavior of your agents. Tracing is extensible by design, supporting custom spans and a wide variety of external destinations, including Logfire, AgentOps, Braintrust, Scorecard, and Keywords AI. For more details about how to customize or disable tracing, see Tracing, which also includes a larger list of external tracing processors.

- Ensure you have `uv` installed.

```bash
uv --version
```

- Install dependencies

```bash
make sync
```

- (After making changes) lint/test

```bash
make tests  # run tests
make mypy   # run typechecker
make lint   # run linter
```

We'd like to acknowledge the excellent work of the open-source community, especially:

We're committed to continuing to build the Agents SDK as an open source framework so others in the community can expand on our approach.