Attacking End-to-End Autonomous Driving


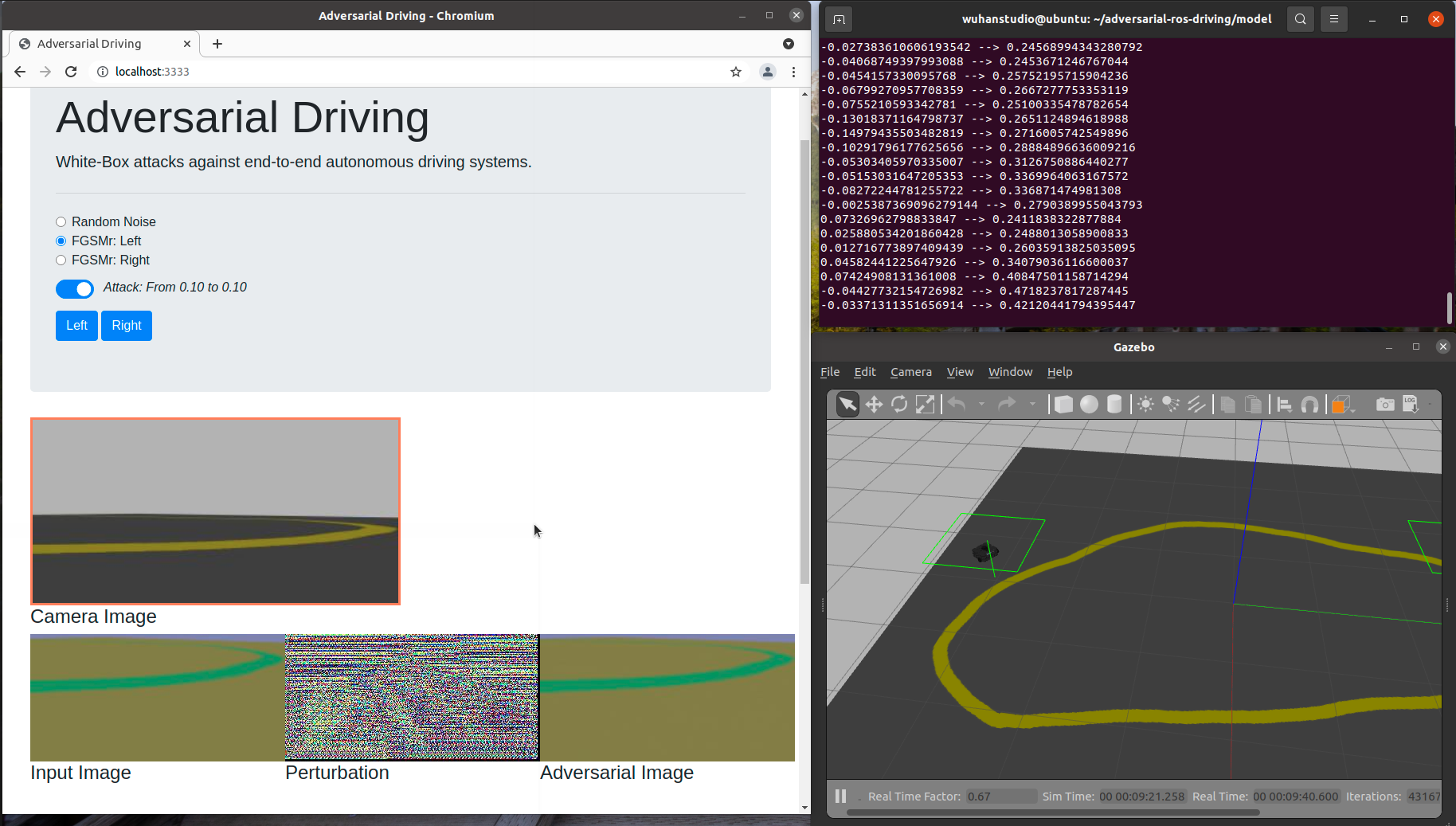

The behaviour of an end-to-end autonomous driving model can be manipulated by adding imperceptible perturbations to the input image.
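The following is a minimal sketch of one well-known way to build such a perturbation: the fast gradient sign method (FGSM). It is applied to a hypothetical linear steering model that reads a flattened grey-scale image with pixel values in [0, 1]. The model, its weights and `epsilon` are made up for illustration. The attacks in the paper target a deep network and may be constructed differently.

```python
"""FGSM-style perturbation against a toy linear steering model (illustration only)."""
from typing import List


def sign(v: float) -> float:
    """Return 1.0 for positive v, -1.0 for negative v and 0.0 for zero."""
    return float((v > 0) - (v < 0))


def predict_steering(pixels: List[float], weights: List[float], bias: float) -> float:
    """Hypothetical linear steering model: angle = w . x + b."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def fgsm_perturb(pixels: List[float], weights: List[float], epsilon: float) -> List[float]:
    """Move each pixel by epsilon in the direction that increases the predicted
    steering angle, then clip to the valid [0, 1] pixel range.

    For the linear model above, d(angle)/d(pixel_i) is simply weights[i], so the
    FGSM step x + epsilon * sign(gradient) reduces to x + epsilon * sign(w).
    """
    return [min(1.0, max(0.0, p + epsilon * sign(w))) for p, w in zip(pixels, weights)]


if __name__ == "__main__":
    image = [0.2, 0.5, 0.8, 0.4]      # made-up flattened grey-scale image
    weights = [0.3, -0.1, 0.05, 0.2]  # made-up model weights (hypothetical)
    adversarial = fgsm_perturb(image, weights, epsilon=0.01)
    print("clean steering:", predict_steering(image, weights, 0.0))
    print("adversarial steering:", predict_steering(adversarial, weights, 0.0))
    print("max pixel change:", max(abs(a - p) for a, p in zip(adversarial, image)))
```

No pixel changes by more than `epsilon`, and this small per-pixel bound is what keeps the perturbation hard to see. Every change pushes the output the same way, though. When no pixel is clipped, the steering angle therefore shifts by `epsilon` times the sum of the absolute weights. To push the steering the other way, flip the sign of the step.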

You may use Anaconda or Miniconda to create the Python environment for the driving model.

$ git clone https://github.com/wuhanstudio/adversarial-driving

$ cd adversarial-driving/model

$ # CPU

$ conda env create -f environment.yml

$ conda activate adversarial-driving

$ # GPU

$ conda env create -f environment_gpu.yml

$ conda activate adversarial-gpu-driving

$ python drive.py model.h5

The Udacity simulator was built for Udacity's Self-Driving Car Nanodegree. Precompiled builds are listed below: download the zip file for your platform, extract it and run the executable file.

$ cd adversarial-driving/simulator/

$ ./Default\ Linux\ desktop\ Universal.x86_64

Version 1 (12/09/16) builds are available for Linux, Mac, Windows 32-bit and Windows 64-bit.

You can use any web server; just serve all the content under client/web.
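Any static file server will do, so Python's standard library can serve client/web without building anything. The following is a minimal sketch. It assumes you run it from the repository root (`adversarial-driving`) and that port 3333 is free; that port is chosen only to match the URL used by the packaged client. The helper name `make_server` is hypothetical.

```python
"""Serve the static client files with Python's standard library (a sketch)."""
import functools
import http.server


def make_server(directory: str, port: int = 3333) -> http.server.ThreadingHTTPServer:
    """Return an HTTP server that serves files from `directory` on localhost.

    Pass port=0 to let the operating system pick a free port; the chosen port
    is then available as `server.server_port`.
    """
    # SimpleHTTPRequestHandler accepts a `directory` argument (Python 3.7+),
    # so partial() binds it without changing the process working directory.
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=directory)
    return http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
```

Calling `make_server("client/web").serve_forever()` blocks and serves the page at http://localhost:3333/. Running `python -m http.server 3333 --directory client/web` from the repository root has the same effect.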

On Windows, run client/client.exe, a single executable that packages everything.

On Linux, macOS or other Unix-like systems, build the server with:

$ cd adversarial-driving/client

$ go install github.com/gobuffalo/packr/v2@v2.8.3

$ go build

$ ./client

The web page is available at: http://localhost:3333/

We also tested our attacks in the ROS Gazebo simulator:

https://github.com/wuhanstudio/adversarial-ros-driving

@INPROCEEDINGS{han2023driving,
  author={Wu, Han and Yunas, Syed and Rowlands, Sareh and Ruan, Wenjie and Wahlström, Johan},
  booktitle={2023 IEEE Intelligent Vehicles Symposium (IV)},
  title={Adversarial Driving: Attacking End-to-End Autonomous Driving},
  year={2023},
  volume={},
  number={},
  pages={1-7},
  doi={10.1109/IV55152.2023.10186386}
}