Attacking End-to-End Autonomous Driving Systems


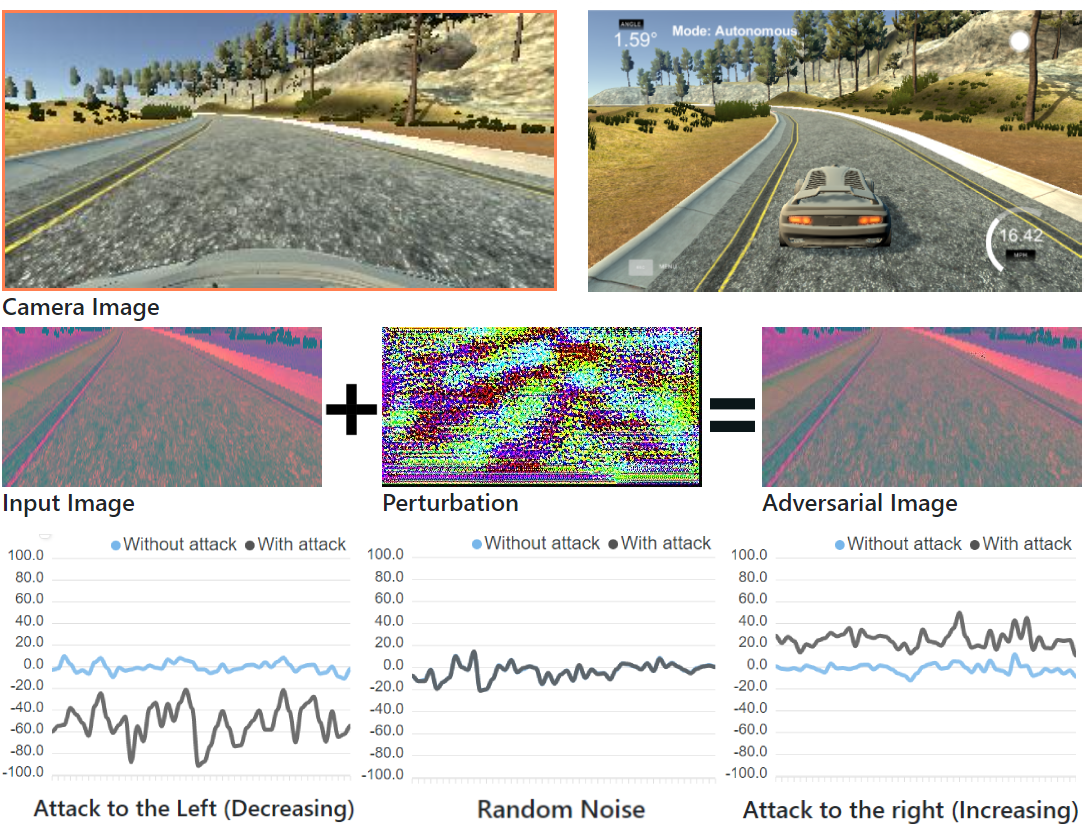

The behaviour of an end-to-end autonomous driving model can be manipulated by adding imperceptible perturbations to the input image.
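To see why a tiny perturbation can move the steering output, consider a toy example. The sketch below is not the attack from the paper. It assumes a hypothetical linear steering model (`predict_steering`) in place of the CNN and applies a fast-gradient-sign style step (`fgsm_perturb`, also hypothetical). For a linear model the gradient with respect to the input is the weight vector, so shifting every pixel by at most `epsilon` changes the output by `epsilon` times the sum of absolute weights, a quantity that grows with the number of pixels.

```python
from typing import List


def predict_steering(weights: List[float], bias: float, image: List[float]) -> float:
    """Hypothetical linear model: steering = w . x + b over flattened pixels."""
    return sum(w * x for w, x in zip(weights, image)) + bias


def sign(v: float) -> int:
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(weights: List[float], image: List[float], epsilon: float,
                 increase: bool = True) -> List[float]:
    """Move each pixel by at most epsilon to raise (or lower) the steering output.

    For a linear model the gradient of the output w.r.t. the input is `weights`,
    so the L-infinity-bounded step is epsilon * sign(weights). Results are
    clipped to the valid pixel range [0, 1].
    """
    direction = 1.0 if increase else -1.0
    return [min(1.0, max(0.0, x + direction * epsilon * sign(w)))
            for w, x in zip(weights, image)]


if __name__ == "__main__":
    weights = [0.01, -0.02, 0.03, -0.01] * 250   # 1000 "pixels"
    image = [0.5] * 1000                         # a flat grey image in [0, 1]
    clean = predict_steering(weights, 0.0, image)
    adv = predict_steering(weights, 0.0, fgsm_perturb(weights, image, epsilon=0.01))
    # Each pixel moves by only 0.01, yet the output shifts by 0.01 * sum(|w|) = 0.175.
    print(f"steering shift: {adv - clean:.3f}")  # steering shift: 0.175
```

A real attack on a CNN replaces the constant weight vector with the gradient computed by backpropagation, but the budget argument is the same.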

```
$ sudo apt install ros-noetic-desktop-full
$ sudo apt install ros-noetic-rosbridge-suite ros-noetic-turtlebot3-simulations ros-noetic-turtlebot3-gazebo ros-noetic-teleop-twist-keyboard

$ git clone https://github.com/wuhanstudio/adversarial-ros-driving
$ cd adversarial-ros-driving
$ git clone https://github.com/wuhanstudio/adversarial-driving
$ cd adversarial-driving
$ cd ros_ws
$ rosdep install --from-paths src --ignore-src -r -y
$ catkin_make
$ source devel/setup.sh

$ export TURTLEBOT3_MODEL=waffle
$ roslaunch turtlebot3_lane turtlebot3_lane.launch

# You may need to drive the TurtleBot onto the track first:
# roslaunch turtlebot3_teleop turtlebot3_teleop_key.launch
```

Open a new terminal, since roslaunch keeps running in the foreground, and set up the Python environment for the model. Choose either the CPU or the GPU environment:

```
$ cd model/

# CPU
$ conda env create -f environment.yml
$ conda activate adversarial-driving

# GPU
$ conda env create -f environment_gpu.yml
$ conda activate adversarial-gpu-driving

# If you use Anaconda as your default python3 environment
$ pip3 install catkin_pkg empy defusedxml numpy twisted autobahn tornado pymongo pillow service_identity
```

Start the rosbridge WebSocket server (it also runs in the foreground):

```
$ roslaunch rosbridge_server rosbridge_websocket.launch
```
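rosbridge exposes ROS topics to non-ROS clients over a WebSocket (port 9090 by default) using JSON messages. As a hedged sketch of what that exchange looks like, the helpers below build the protocol's `subscribe`, `advertise` and `publish` operations for the topics used in this project. The helper names are hypothetical, and we do not claim that drive.py builds its messages this way.

```python
import json


def subscribe_msg(topic: str, msg_type: str) -> str:
    """Ask rosbridge to forward every message published on `topic`."""
    return json.dumps({"op": "subscribe", "topic": topic, "type": msg_type})


def advertise_msg(topic: str, msg_type: str) -> str:
    """Announce that this client will publish `msg_type` messages on `topic`."""
    return json.dumps({"op": "advertise", "topic": topic, "type": msg_type})


def publish_twist(topic: str, linear_x: float, angular_z: float) -> str:
    """Publish a geometry_msgs/Twist: forward speed (m/s) plus yaw rate (rad/s)."""
    twist = {
        "linear": {"x": linear_x, "y": 0.0, "z": 0.0},
        "angular": {"x": 0.0, "y": 0.0, "z": angular_z},
    }
    return json.dumps({"op": "publish", "topic": topic, "msg": twist})


if __name__ == "__main__":
    # These strings would be sent over a WebSocket connected to ws://localhost:9090.
    print(subscribe_msg("/camera/rgb/image_raw", "sensor_msgs/Image"))
    print(advertise_msg("/cmd_vel", "geometry_msgs/Twist"))
    print(publish_twist("/cmd_vel", linear_x=0.1, angular_z=0.2))
```

Keeping the message construction separate from the socket code makes the JSON easy to inspect and test without a running ROS master.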

In another terminal, with the conda environment activated, start the driving model:

```
# For a real TurtleBot3
$ python3 drive.py --env turtlebot --model model_turtlebot.h5

# For the Gazebo simulator
$ python3 drive.py --env gazebo --model model_gazebo.h5
```

The web page will be available at: http://localhost:8080/

That's it!
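When camera frames travel through rosbridge, a client receives each sensor_msgs/Image as JSON, and rosbridge typically encodes the uint8[] `data` field as a base64 string. Below is a minimal sketch of turning such a message back into pixel rows, assuming the `rgb8` encoding. `decode_rgb8` is a hypothetical helper; the real drive.py may decode frames differently (for example with NumPy or PIL).

```python
import base64
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]


def decode_rgb8(msg: Dict) -> List[List[Pixel]]:
    """Convert a sensor_msgs/Image received as JSON into rows of (R, G, B) tuples.

    Assumes `encoding` is "rgb8" (3 bytes per pixel) and that `data` is base64.
    `step` is the length of one row in bytes and may include trailing padding.
    """
    if msg["encoding"] != "rgb8":
        raise ValueError(f"unsupported encoding: {msg['encoding']}")
    raw = base64.b64decode(msg["data"])
    width, height, step = msg["width"], msg["height"], msg["step"]
    if len(raw) < height * step:
        raise ValueError("image data shorter than height * step")
    rows = []
    for r in range(height):
        row = raw[r * step : r * step + width * 3]  # skip any padding bytes
        rows.append([tuple(row[i : i + 3]) for i in range(0, width * 3, 3)])
    return rows


if __name__ == "__main__":
    # A 2x1 test frame: one red pixel, one green pixel.
    frame = {
        "width": 2, "height": 1, "step": 6, "encoding": "rgb8",
        "data": base64.b64encode(bytes([255, 0, 0, 0, 255, 0])).decode("ascii"),
    }
    print(decode_rgb8(frame))  # [[(255, 0, 0), (0, 255, 0)]]
```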

The following scripts collect image data from the /camera/rgb/image_raw topic together with the corresponding control commands published on /cmd_vel. The log is saved to driving_log.csv, and the images are saved in the IMG/ folder.

```
$ cd model/data

# Collect left camera data
$ python3 line_follow.py --camera left --env gazebo
$ python3 ros_collect_data.py --camera left --env gazebo

# Collect center camera data
$ python3 line_follow.py --camera center --env gazebo
$ python3 ros_collect_data.py --camera center --env gazebo

# Collect right camera data
$ python3 line_follow.py --camera right --env gazebo
$ python3 ros_collect_data.py --camera right --env gazebo
```
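The log pairs each saved frame with a control command. One simple way to do that pairing, sketched here under stated assumptions, is to remember the most recent /cmd_vel message and write it next to each incoming image. The `DrivingLogger` class, the file naming scheme and the column layout (image path, linear.x, angular.z) are hypothetical; the actual driving_log.csv written by ros_collect_data.py may use different columns.

```python
import csv
import io
from typing import Optional, TextIO, Tuple


class DrivingLogger:
    """Pair each camera frame with the most recent /cmd_vel command.

    Hypothetical CSV layout: image_path, linear_x, angular_z. Writing the image
    bytes themselves is left out; only the log row is produced here.
    """

    def __init__(self, out: TextIO, image_dir: str = "IMG") -> None:
        self.writer = csv.writer(out)
        self.image_dir = image_dir
        self.latest_cmd: Optional[Tuple[float, float]] = None
        self.count = 0

    def on_cmd_vel(self, linear_x: float, angular_z: float) -> None:
        """Callback for /cmd_vel: remember the latest command."""
        self.latest_cmd = (linear_x, angular_z)

    def on_image(self, camera: str) -> Optional[str]:
        """Callback for the camera topic: log a row and return the image path."""
        if self.latest_cmd is None:
            return None  # no command seen yet, so the frame has no label
        path = f"{self.image_dir}/{camera}_{self.count:06d}.jpg"
        self.count += 1
        self.writer.writerow([path, *self.latest_cmd])
        return path


if __name__ == "__main__":
    buf = io.StringIO()  # stands in for open("driving_log.csv", "w", newline="")
    log = DrivingLogger(buf)
    log.on_image("left")            # ignored: no /cmd_vel yet
    log.on_cmd_vel(0.1, 0.3)
    log.on_image("left")
    print(buf.getvalue().strip())   # IMG/left_000000.jpg,0.1,0.3
```

Using the latest command rather than waiting for an exactly time-matched one keeps the logger simple, at the cost of a small lag between image and label.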

Once the data is collected, we can train a model that tracks the lane.

```
$ cd model
$ python3 model.py
```
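Collecting left, center and right camera data suggests the common behavioural-cloning trick of labelling off-centre views with a steering correction that points back to the lane centre. Whether model.py does this, and with which value, is an assumption. The sketch below uses a hypothetical correction of 0.1 and the ROS convention (REP 103) that a positive angular.z turns the robot left.

```python
from typing import Iterable, List, Tuple

# Hypothetical yaw-rate offset (rad/s) added for off-centre camera views.
CORRECTION = 0.1


def label_for_camera(camera: str, angular_z: float, correction: float = CORRECTION) -> float:
    """Return the training target for a frame recorded by `camera`.

    ROS convention (REP 103): positive angular.z turns the robot left. A view
    from the left of the lane centre should steer back to the right, so its
    target is lowered; a view from the right is raised.
    """
    offsets = {"left": -correction, "center": 0.0, "right": correction}
    if camera not in offsets:
        raise ValueError(f"unknown camera: {camera}")
    return angular_z + offsets[camera]


def build_samples(rows: Iterable[Tuple[str, str, float]]) -> List[Tuple[str, float]]:
    """Map (image_path, camera, angular_z) rows to (image_path, target) pairs."""
    return [(path, label_for_camera(camera, az)) for path, camera, az in rows]


if __name__ == "__main__":
    rows = [("IMG/left_0.jpg", "left", 0.0),
            ("IMG/center_0.jpg", "center", 0.0),
            ("IMG/right_0.jpg", "right", 0.0)]
    print(build_samples(rows))
    # [('IMG/left_0.jpg', -0.1), ('IMG/center_0.jpg', 0.0), ('IMG/right_0.jpg', 0.1)]
```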

We also tested our attacks in the Udacity autonomous driving simulator:

https://github.com/wuhanstudio/adversarial-driving

```
@INPROCEEDINGS{han2023driving,
  author={Wu, Han and Yunas, Syed and Rowlands, Sareh and Ruan, Wenjie and Wahlström, Johan},
  booktitle={2023 IEEE Intelligent Vehicles Symposium (IV)},
  title={Adversarial Driving: Attacking End-to-End Autonomous Driving},
  year={2023},
  volume={},
  number={},
  pages={1-7},
  doi={10.1109/IV55152.2023.10186386}
}
```